Part 3 - Why no one puts the kettle on after EastEnders anymore

From wasted time to repeatable expertise: the skills product managers need to design agentic AI systems, based on a real-world 'Wicked Problem'.

Last week wrapped up with a conversation that, unexpectedly, left me thinking for days. I was speaking with a client who works deep inside the national electricity grid, supplying power to millions.

Now, electricity isn’t always the hottest topic, especially when it drifts into how much AI-driven transformation of the sector will cost. But our chat quickly turned into something far bigger when the client made this one point:

“We can’t plan supply and demand like we used to, Tim. People just don’t behave predictably anymore. Gone are the days of putting the kettle on after EastEnders. And in the next 10 years, we’re set to lose a huge amount of knowledge around complex time-series planning as engineers retire.”

That’s when it hit me—this isn’t just an electricity conversation. It’s a ‘wicked problem’ for energy systems of the world. One where our assumptions about systems, behaviour, and how we plan are collapsing.

This article unpacks what’s really at stake as energy consumption grows more unpredictable. It explores where product managers and business leaders, especially those working with agentic AI, need to upskill in a world where behaviour is non-linear, context is everything, and repeatable expertise, not features, is the currency of value.

The use case: The death of predicting energy demand

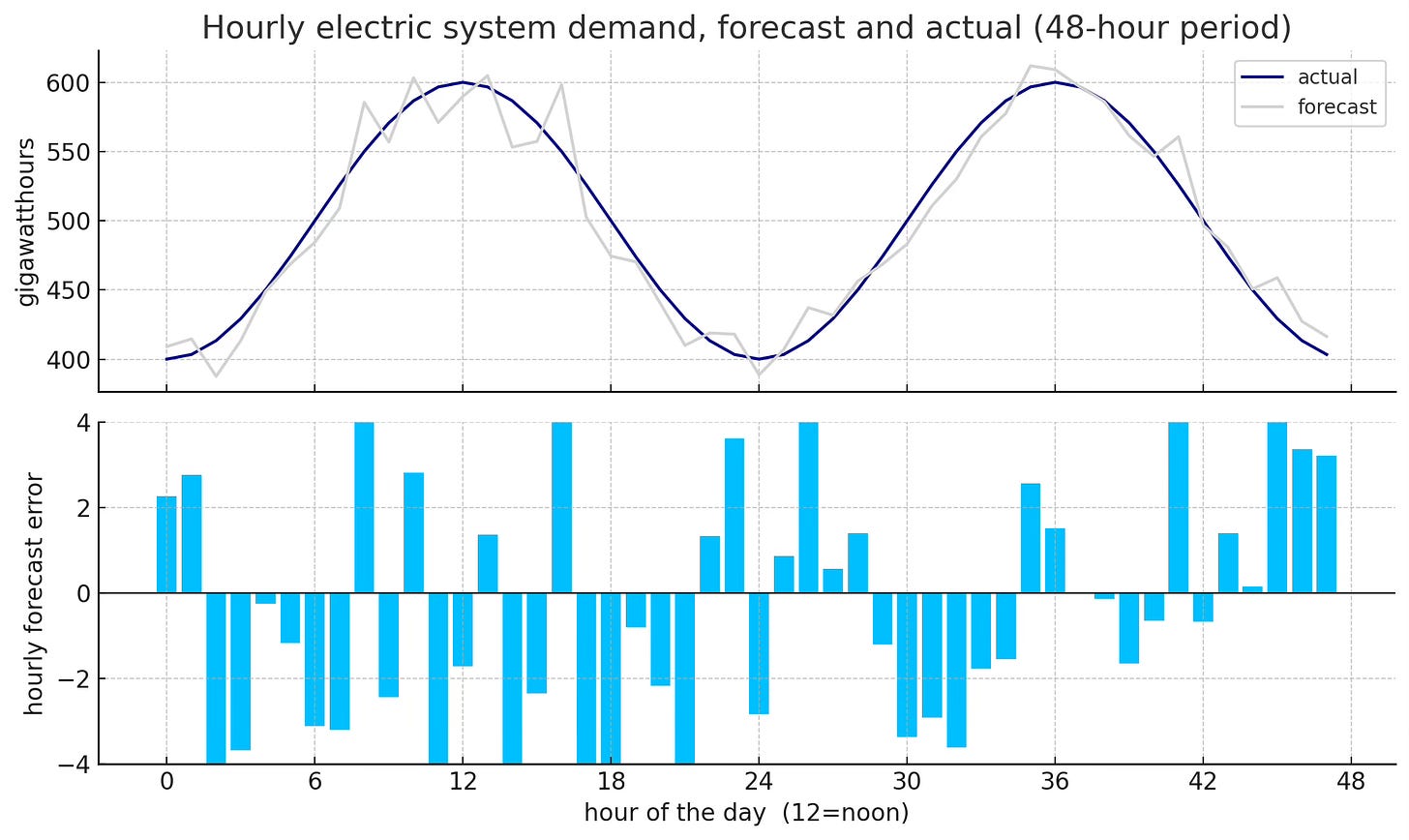

There was a time when electricity demand followed human routine like clockwork.

I remember growing up: at 8:00 PM my parents would finish watching EastEnders and, like millions of other people across the country, take part in a remarkable phenomenon. They clicked the kettle on to make an evening cup of British tea.

The surge was so predictable that the National Grid built models around it. It was even given a name: the “TV pickup.”

But that era is declining now. Living by the TV guide is what the ‘Baby Boomers’ did and still do.

Millennials, Gen Z, and Gen Alpha have shifted, though. Flexible hours. Streaming shows. Snacking at 10am, and take-out at 9pm.

“We live in a world of fractured timelines and personalised routines.”

The result?

Electricity demand no longer fits a neat curve. It spikes when no one expects it, flattens when it should rise, and increasingly mirrors the messiness of our daily lives.

What hit me though was this: If the grid can’t keep up with unpredictable human behaviour, could agentic AI systems using non-deterministic ‘tree of thought’ be the solution?

The ‘Wicked Problem’ of unpredictable energy use

Electricity systems around the world run on one rule:

what goes in must equal what comes out.

That’s hard enough when demand is predictable. But if human activity no longer follows routine patterns—how do you forecast a grid with unpredictable behaviour and avoid guesswork?

The industry has tried to adapt. They use highly skilled engineers to adjust time-series predictions in real-time, drawing from years of experience.

This creates three big issues, though:

Expertise is retiring: The engineers who understand these recalculations are nearing retirement, and transferring knowledge takes time.

Cognitive load is increasing: Adapting to human behaviour in real-time requires complex reasoning, not just automation.

Costs are opaque: With platforms like Copilot being distributed across organisations, product leaders can’t see what’s driving compute costs.

When I spoke with the client, they were attempting to resolve this problem by juggling their own in-house GenAI platform, a sudden rollout of Copilot by IT, and internal cost spreadsheets pulled manually from Azure logs. That just created new problems when trying to make sense of AI’s impact.

This isn’t just about electricity anymore. It’s about how the energy industry as a whole will need to redesign systems to work in an unpredictable world.

Why Agentic AI might provide a solution

When I asked the client how they were trying to manage this unpredictable world of electricity demand, he said something alarming:

“We’re running critical infrastructure on assumptions that don’t hold anymore. And we’ve got 50,000 hits on a GPT tool each month, but no real handle on cost, context, or control.”

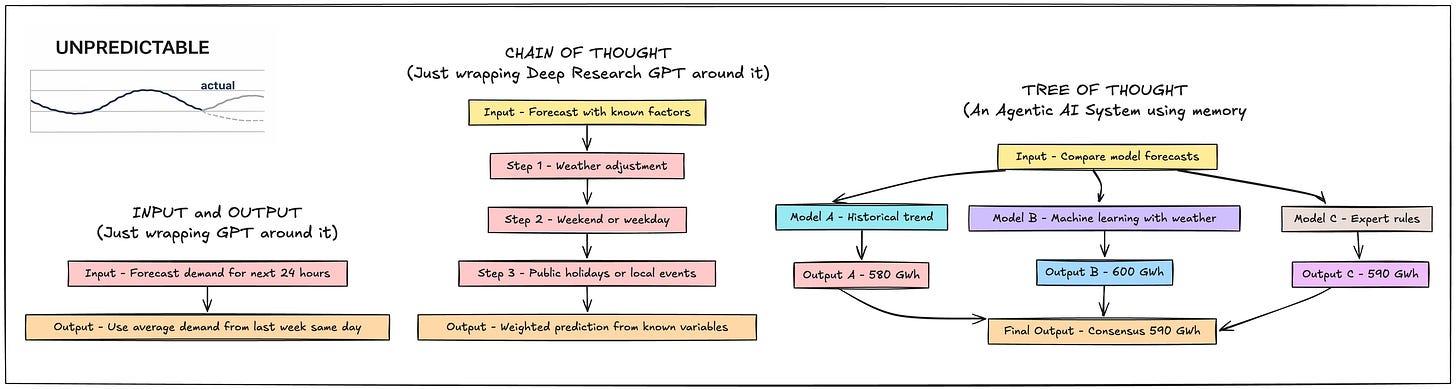

That’s when we got into how Agentic AI might actually help. But not the “throw a GPT wrapper on it” kind of AI. I mean real agentic systems — built to reason, reflect, and adapt across unpredictable complex problems.

Solving complexity with “Tree of Thought”

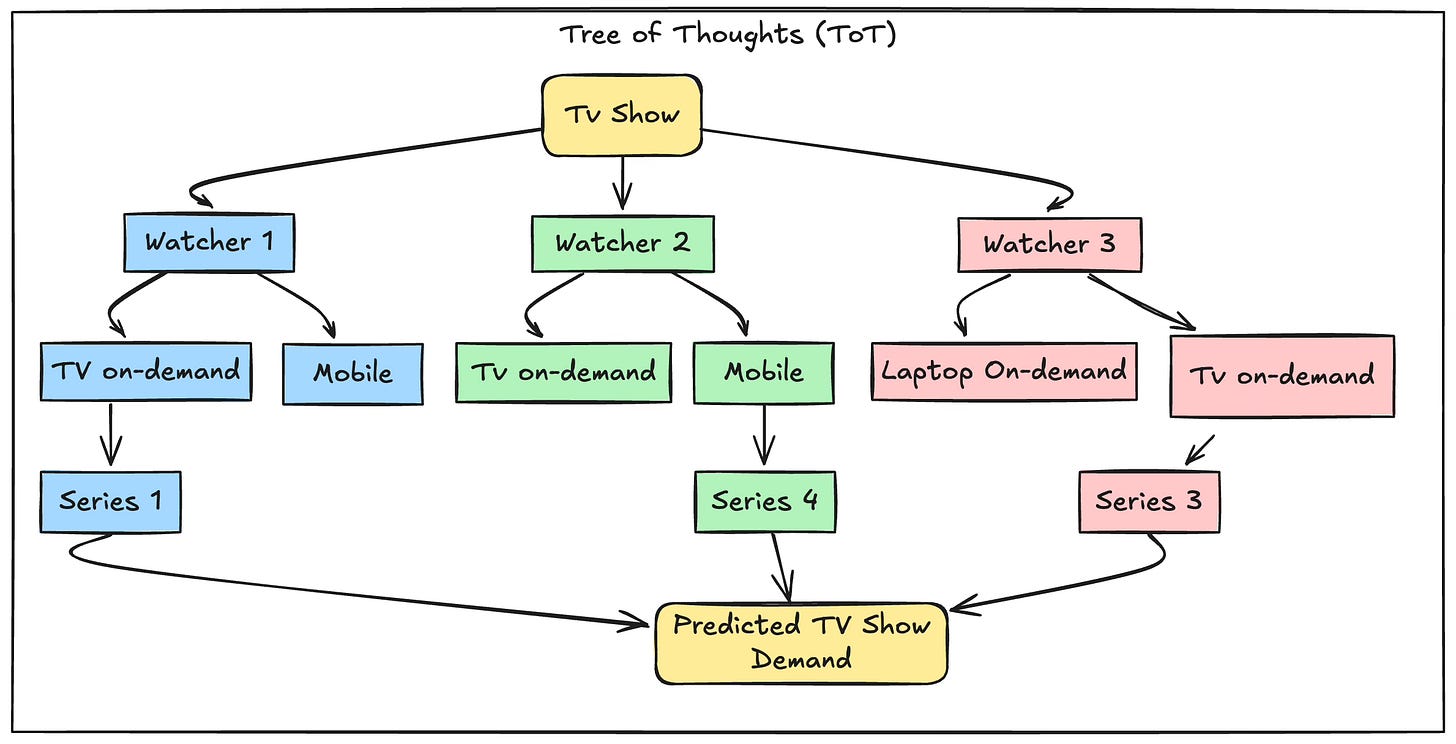

We’ve been exploring a powerful design pattern in templonix when it comes to complex solutioning.

It’s called Tree of Thought (ToT).

I describe it as simply as this:

“Imagine the agent is like a tree. The trunk is the initial problem. Each branch represents a possible reasoning path. At the end, the branches merge again into the trunk — giving you the most balanced, assured answer.”

Here’s how it works in practice:

Each branch is powered by a different LLM (or the same LLM with different system prompts).

Each step down the branch is a chained prompt, reasoning in stages.

The trunk collects the outputs, checks for consensus, validates edge cases, and proposes the most trustworthy decision.

This setup doesn’t just explore one line of thinking. It explores many, in parallel. It mimics how human experts approach complex decisions — by evaluating multiple angles and reconciling them.

Returning to my client, this would mean running different agents that model distinct ‘engineer perspectives’ or ‘forecasting methods’. One might model based on weather trends. Another based on historical pick-up patterns. Another might reference renewables or geopolitical events.

At the end, the branches come back together. The system compares all paths and delivers a recommendation—not just a prediction, but a justified answer.
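
To make the branch-and-merge idea concrete, here is a minimal Python sketch of the pattern as described above. It is an illustration under stated assumptions, not how any production system is built: `fake_llm` is a hypothetical stand-in for a real model call, the three perspectives mirror the examples in this section, and the percentage adjustments are invented numbers. The trunk here simply takes the median and reports how far the branches disagree.

```python
from concurrent.futures import ThreadPoolExecutor
from dataclasses import dataclass
from statistics import median


@dataclass
class BranchResult:
    perspective: str     # which "engineer perspective" produced this
    forecast_mw: float   # the branch's demand estimate
    trace: list          # one entry per chained reasoning step


def fake_llm(system_prompt, user_prompt):
    """Hypothetical stand-in for a real LLM call; echoes input so the sketch runs offline."""
    return f"{system_prompt} -> {user_prompt}"


# Hypothetical perspectives and the (invented) adjustment each applies to the baseline.
PERSPECTIVES = {
    "weather": 0.04,
    "historical_pickup": -0.02,
    "renewables": 0.01,
}


def run_branch(perspective, baseline_mw):
    """One branch: same model, different system prompt, prompts chained in stages."""
    system_prompt = f"You are a grid engineer reasoning from {perspective} data."
    trace = []
    step = f"Baseline demand is {baseline_mw} MW."
    for instruction in ("List the relevant factors.", "Estimate the adjustment."):
        # Each step receives the previous step's output as context.
        step = fake_llm(system_prompt, f"{instruction} Context: {step}")
        trace.append(step)
    forecast = baseline_mw * (1 + PERSPECTIVES[perspective])
    return BranchResult(perspective, forecast, trace)


def tree_of_thought(baseline_mw):
    """Run all branches in parallel, then merge them back at the trunk."""
    with ThreadPoolExecutor() as pool:
        results = list(pool.map(lambda p: run_branch(p, baseline_mw), PERSPECTIVES))
    forecasts = [r.forecast_mw for r in results]
    return {
        "recommendation_mw": median(forecasts),
        "spread_mw": max(forecasts) - min(forecasts),
        "branches": results,
    }


result = tree_of_thought(30000.0)
print(round(result["recommendation_mw"]), "MW, spread", round(result["spread_mw"]), "MW")
```

The median is only one possible merge rule. The point of the sketch is the shape: several independent reasoning paths, each leaving a trace, and a single place where they are compared.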

You don’t need 5 LLMs — You need 1 Agent with good prompts

The client had originally assumed they’d need to use multiple language models for different scenarios.

But that’s not strictly true. This is another example of agentic AI systems getting lost in the hype. To clarify: you can use a single LLM if you’ve got strong system prompts and tight governance.

This is a skill designers and product teams need to learn fast:

how to shape LLM behaviour without switching models.

This is important in the design process of agentic AI systems, as you need to:

Design persona-specific prompts with clear goals, tone, and context

Define strong system prompts that act like guardrails, anchoring what the agent is and isn’t allowed to do

Create domain-specific memory so the agent knows what matters in energy vs finance vs healthcare
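
As a rough illustration of those three points, the Python sketch below builds persona-specific requests that all target one model. Everything named here is an assumption made for the example: the `Persona` fields, the `MODEL` placeholder, and the request dictionary, which follows the commonly used system/user chat message shape rather than any specific vendor's API.

```python
from dataclasses import dataclass

MODEL = "single-model-placeholder"  # hypothetical: the same model serves every persona


@dataclass(frozen=True)
class Persona:
    name: str
    goal: str
    tone: str
    allowed: tuple             # guardrails: what the agent may do
    forbidden: tuple           # guardrails: what the agent must not do
    domain_memory: tuple = ()  # domain facts the agent should rely on

    def system_prompt(self):
        lines = [
            f"You are {self.name}. Goal: {self.goal}. Tone: {self.tone}.",
            "You may: " + "; ".join(self.allowed) + ".",
            "You must not: " + "; ".join(self.forbidden) + ".",
        ]
        if self.domain_memory:
            lines.append("Domain facts to rely on:")
            lines.extend(f"- {fact}" for fact in self.domain_memory)
        return "\n".join(lines)


def build_request(persona, question):
    """Build a stateless request: everything the model needs travels with the call."""
    return {
        "model": MODEL,
        "messages": [
            {"role": "system", "content": persona.system_prompt()},
            {"role": "user", "content": question},
        ],
    }


weather = Persona(
    name="a weather-driven demand forecaster",
    goal="estimate demand shifts from temperature and daylight",
    tone="concise and numeric",
    allowed=("cite the forecast data provided",),
    forbidden=("comment on pricing", "guess when data is missing"),
    domain_memory=("Supply must match demand at all times.",),
)
history = Persona(
    name="a historical-pattern demand forecaster",
    goal="estimate demand from past pickup patterns",
    tone="concise and numeric",
    allowed=("cite the historical data provided",),
    forbidden=("comment on pricing", "guess when data is missing"),
    domain_memory=("Supply must match demand at all times.",),
)

for persona in (weather, history):
    request = build_request(persona, "What happens to demand at 8:30 PM tonight?")
    print(request["model"], "|", request["messages"][0]["content"].splitlines()[0])
```

Both requests go to the same model; only the system prompt changes. Keeping personas as data also means they can be reviewed and versioned like any other product spec.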

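By designing the agentic AI this way, we make stateless calls to the LLM that return far more consistent output, because the agent’s identity and context are so well defined. Outputs can still vary between runs, and hallucinations are reduced rather than eliminated, so validation still matters.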

That’s a game-changer not just for energy, but healthcare, finance, and any other highly regulated industry.

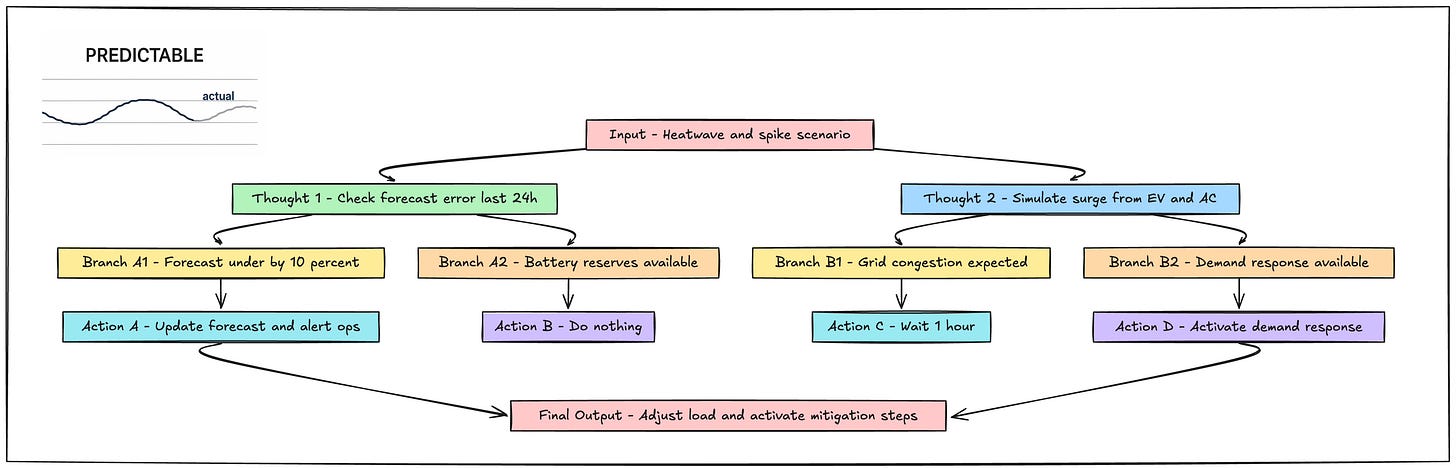

The ‘Magic’ to building trust with users - Reconciliation Layer

Back to Tree of Thought. It’s not just about the branches — it’s about the return to the trunk.

This layer is where you build trust with users:

You compare outputs from the different paths

You apply validation checks: What confidence score? What sources were used?

You escalate to a human if ambiguity remains

In my experience, this is what makes ToT useful for ‘wicked problems’. For our client dealing with electricity demand, it gives their engineers a reasoning trace, a logic map, and a fallback plan. You’re not just getting a yes/no. You’re seeing how the answer was formed, and where it might be wrong.
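
A reconciliation layer along these lines could be sketched in Python as follows. This is a simplified illustration, not a reference design: the `PathOutput` fields, the two thresholds, and the sample numbers are all hypothetical, and in practice domain experts would decide what counts as enough confidence or too much disagreement.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PathOutput:
    path: str
    forecast_mw: float
    confidence: float  # 0.0 to 1.0, however the system chooses to score it
    sources: list      # what the path says it relied on


# Hypothetical thresholds for the example.
MIN_CONFIDENCE = 0.6
MAX_DISAGREEMENT = 0.05  # 5% relative spread between accepted paths


def reconcile(outputs):
    """Compare paths, apply validation checks, and escalate if ambiguity remains."""
    trace, valid = [], []
    for o in outputs:
        if o.confidence < MIN_CONFIDENCE:
            trace.append(f"{o.path}: rejected, confidence {o.confidence:.2f} is too low")
        elif not o.sources:
            trace.append(f"{o.path}: rejected, no sources cited")
        else:
            trace.append(f"{o.path}: accepted, {o.forecast_mw:.0f} MW from {', '.join(o.sources)}")
            valid.append(o)

    if len(valid) < 2:
        trace.append("fewer than two valid paths: escalate to a human")
        return {"decision": "escalate", "forecast_mw": None, "trace": trace}

    forecasts = [o.forecast_mw for o in valid]
    average = mean(forecasts)
    spread = (max(forecasts) - min(forecasts)) / average
    if spread > MAX_DISAGREEMENT:
        trace.append(f"paths disagree by {spread:.1%}: escalate to a human")
        return {"decision": "escalate", "forecast_mw": None, "trace": trace}

    trace.append(f"paths agree within {spread:.1%}: recommend {average:.0f} MW")
    return {"decision": "recommend", "forecast_mw": average, "trace": trace}


agreeing = [
    PathOutput("weather", 31000.0, 0.8, ["weather forecast"]),
    PathOutput("history", 30400.0, 0.7, ["pickup records"]),
    PathOutput("renewables", 29000.0, 0.4, ["wind outlook"]),
]
outcome = reconcile(agreeing)
print(outcome["decision"])
for line in outcome["trace"]:
    print(" -", line)
```

The `trace` list is the part engineers would actually read: it records why each path was accepted or rejected and why the layer recommended or escalated.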

Why Product Managers need to learn non-determinism

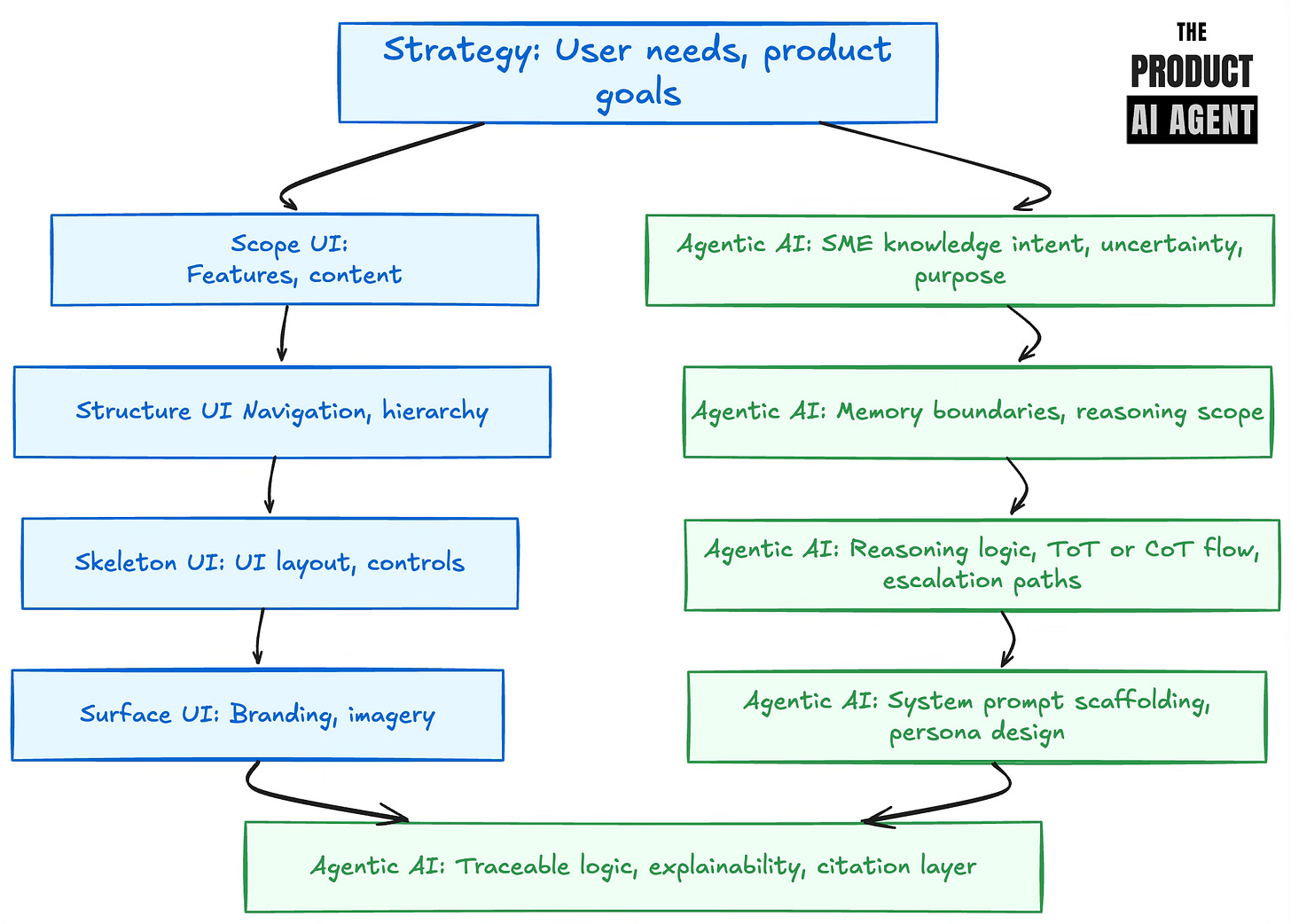

As I discussed in part 2, the real skill needed for agentic AI systems isn’t sketching screens—it’s codifying knowledge and making it repeatable.

If you’re a product manager stepping into this space, here’s the hard truth: your usual approach to building a roadmap won’t save you.

Most product managers today are still running traditional software development lifecycle (SDLC) playbooks built for deterministic systems:

We plan features

We write user stories

We design workflows

We create screens

We scope features into releases

“But what happens when users don’t follow the flow? When behaviour is emergent, not engineered?”

I’m now coming to terms with how this experience of agentic AI design is changing my role as a product manager.

I’m no longer a feature owner.

I’m a systems architect of cognition.

I’m not mapping the user journey. I’m codifying how experts think, decide, and act under pressure, to define my requirements for agentic systems.

These are the skills I’ve come to develop based on experiences with templonix:

Translate subject matter expertise into repeatable logic

Define agent behaviours with system prompts, not wireframes

Collaborate with designers on cognitive UX, not UI

Cost-model token usage, not just dev hours

Architect escalation paths, memory retention, and reasoning flows
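
Cost-modelling token usage can start as simple arithmetic. The Python sketch below is a back-of-the-envelope model only: the per-token prices, token counts, and retry rate are hypothetical placeholders to be replaced with your provider's real rates and your own usage logs. The 50,000 requests per month echoes the usage figure the client mentioned.

```python
from dataclasses import dataclass


@dataclass
class CallProfile:
    input_tokens: int
    output_tokens: int
    branches: int = 1          # parallel reasoning paths per request
    steps_per_branch: int = 1  # chained prompts within each path
    retry_rate: float = 0.0    # fraction of calls that get retried once


# Hypothetical prices in USD per 1,000 tokens; substitute real rates.
PRICE_IN_PER_1K = 0.001
PRICE_OUT_PER_1K = 0.002


def cost_per_request(profile):
    """Expected cost of one user request, counting every LLM call it triggers."""
    calls = profile.branches * profile.steps_per_branch * (1 + profile.retry_rate)
    per_call = (
        profile.input_tokens / 1000 * PRICE_IN_PER_1K
        + profile.output_tokens / 1000 * PRICE_OUT_PER_1K
    )
    return calls * per_call


def monthly_cost(profile, requests_per_month):
    return cost_per_request(profile) * requests_per_month


single_call = CallProfile(input_tokens=1000, output_tokens=500)
tree_of_thought = CallProfile(
    input_tokens=1000, output_tokens=500,
    branches=3, steps_per_branch=2, retry_rate=0.1,
)

for label, profile in (("single call", single_call), ("tree of thought", tree_of_thought)):
    print(f"{label}: ${monthly_cost(profile, 50_000):.2f} per month")
```

With these made-up numbers, the three-branch, two-step design costs about 6.6 times as much as a single call, which is exactly the kind of trade-off a product manager needs to see before committing to a design.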

Agentic AI requires new thinking, new language, and a different kind of intuition. And product managers who can’t make that leap? They risk shipping tools that can’t be trusted—or worse, never leave the lab.

The upskilling Product Managers need

You don’t need to be a data scientist to work in this space.

But you do need to evolve. Fast!

Here’s where I’d advise product managers to start if designing agentic AI systems:

🧠 Learn Tree of Thought - Understand how branching logic can help model complex, multi-perspective decisions.

📚 Study Prompt Engineering - System prompts are the new product spec. Learn to define tone, guardrails, and persona logic in language, not just design systems.

🧾 Build Knowledge Architecture - Partner with domain experts to extract how they think. Turn that into memory blueprints, not just documentation.

💸 Cost Model Everything - Tokens, retries, latency, memory calls—know the financial impact of every design choice.

🔁 Design Reconciliation Layers - Agentic systems must show their work. Build validation logic, traceability, and fallback escalation from day one.

This isn’t about adopting new tools. It’s about adopting a new mental model of how intelligent systems need to behave.

Final Thoughts

The kettle-after-EastEnders moment is more than a cultural relic. It’s a symbol of a world we could predict and plan for.

That world is ending. Agentic AI offers a way forward—not just to automate tasks, but to capture expertise, reason through ambiguity, and scale judgement under pressure.

But that starts with the people who design the systems.

If you’re a product manager, now is the time to upskill. Not in frameworks or Figma files, but in cognitive architecture, memory modelling, and reasoning design.

“The real work isn’t building features anymore. It’s building thinking systems we can trust.”

💬 Found this helpful? Share your thoughts, ask a question, or challenge the thinking in the comments.
