Part 2 - The top skills Product Managers need to deliver live Agentic AI production systems

What you need to know to build repeatable expertise at scale in the agent economy

This week I was catching up with Lizzie over WhatsApp. She’s one of the sharpest product minds I know — usually the one giving me tips.

But this time, she was stuck.

“Tim, can we chat? Everyone’s going on about Agents again. Nothing fits our usual software lifecycle. We don’t know what we’re actually building. I’m lost.”

Lizzie’s team builds chatbot tools to support marketing agencies by deploying them into channels like WeChat, WhatsApp, and Telegram. But when the brief shifted to “agentic AI,” things broke down.

Design didn’t know what to sketch.

Data didn’t know what to prep.

Leadership approved the plan — but had no idea what it involved.

So we swapped voice notes. Here’s what I told her:

“If you're a PM working on agentic AI, it’s not about knowing the latest model or dragging flows in Make.com. This isn’t about prompts. It’s about building knowledge systems.”

Agentic AI demands a shift: The real skill isn’t sketching features, it’s codifying knowledge and making it repeatable. That’s why old lifecycles are failing Lizzie — they were built for UI, not intelligent knowledge management.

If you're a product manager, like Lizzie, wondering how to move from user stories and workflows to strategic systems that scale knowledge — read on.

The social media trap: Agentic AI in the wrong context

Before we get into the core skills product managers need, let’s address the noise — especially on LinkedIn.

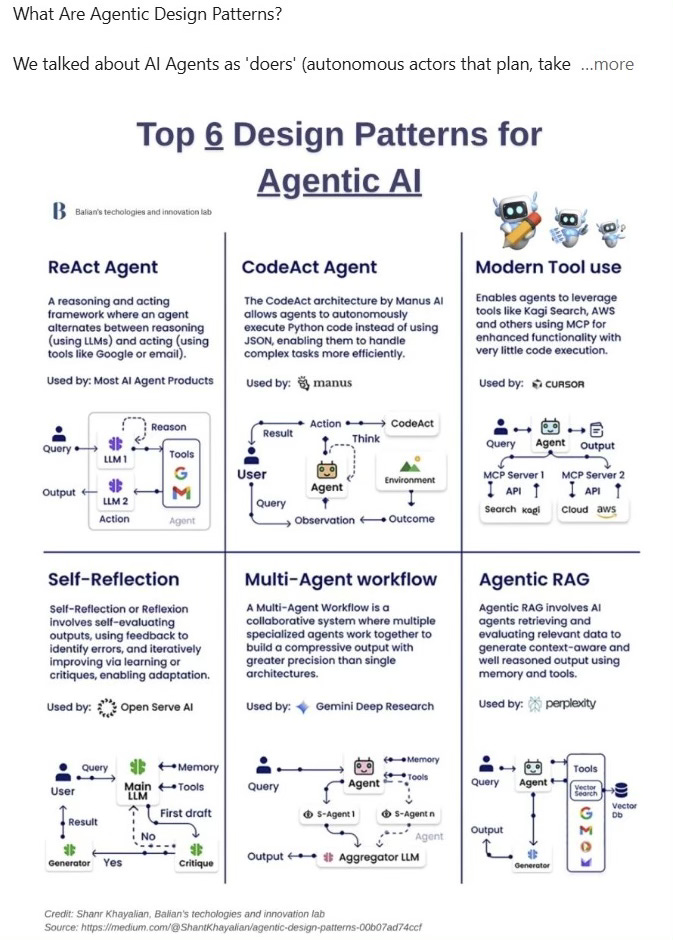

Lizzie sent me one of those “Top Agent Patterns” posts. It had shiny diagrams with names like ReAct, CodeAct, Toolformer, and Self-Reflector. Lots of arrows, icons, and smart-sounding loops.

But here’s the thing. They look like systems, but they aren’t agents.

They’re prompt patterns for LLMs.

Take ReAct, for example. This is just a reasoning style. It guides an LLM through a loop of thought → action → observation, repeated until the model settles on an answer. On its own, it doesn’t manage tools, recover from failure, or, crucially, handle memory.

That’s why these posts are misleading. They’re not wrong — they’re just incomplete.

And in product, being incomplete is dangerous. It leads to PoC paralysis or worse: launching something no one can trust.

Real agents don’t live in mockups. They live in systems with guardrails, workflows, memory, audit logs, and cost controls.

I told Lizzie that. Then, I walked her through the five core skills that product managers actually need to build agentic AI systems that work in the real world.

The 5 skills needed to become an Agentic AI PM

Before we get into the skills, I told Lizzie something important:

“Forget everything you know about building software. Agentic AI doesn’t follow the old rules.”

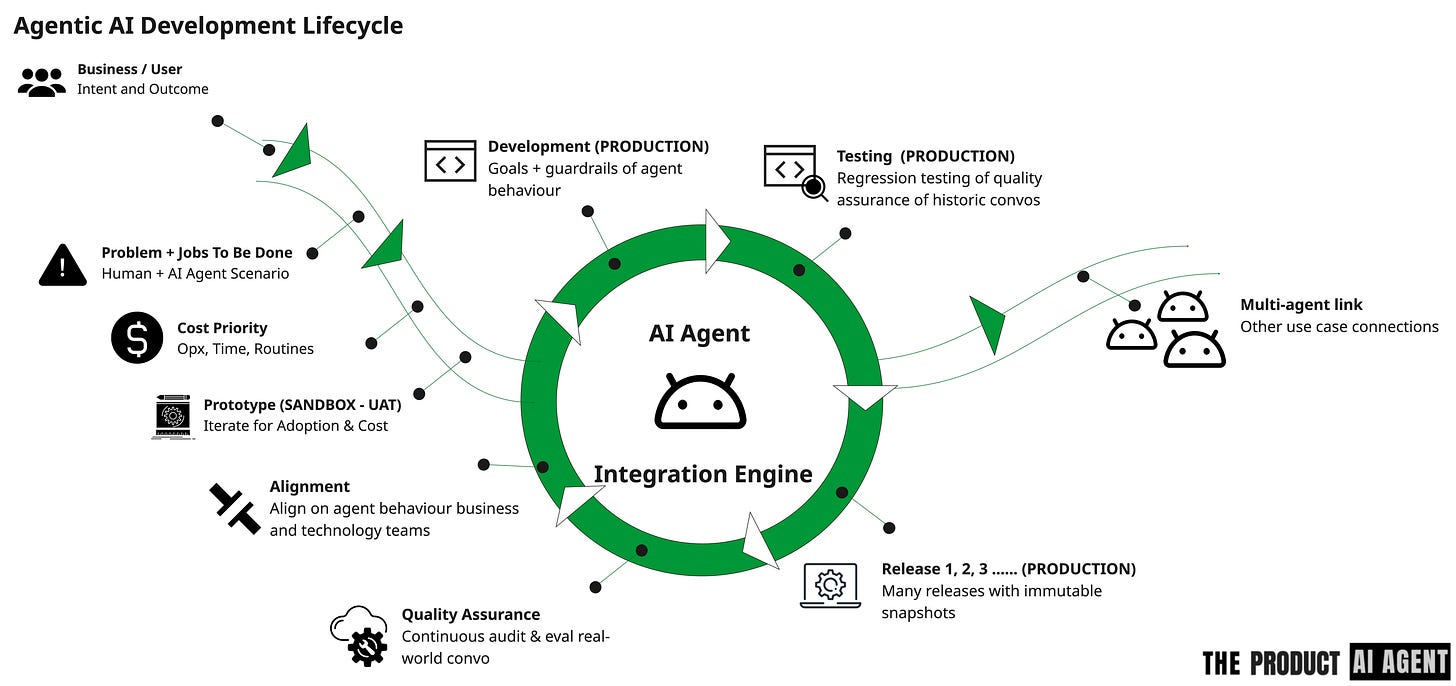

We’re dealing with a new classification of software — one that follows what I call the Agentic Development Lifecycle.

Unlike traditional systems, agents operate non-deterministically. That means even with the same input, outcomes may vary. You’re not building fixed workflows — you’re designing systems that adapt, reason, and evolve in real time.

In practice, this changes everything.

Where SDLC is linear and predictable, agentic development is continuous, iterative, and lives in production from day one.

The Software Development Lifecycle

The Agentic Development Lifecycle

With that shift in mind, here are the five core skills every product manager needs to build agentic AI systems that actually work.

1. Understand that knowledge is now infrastructure

In the agent economy, knowledge isn’t content — it’s infrastructure. Just like electricity powers machines, structured knowledge powers agents.

This shift pulled me back to my earlier career building websites, where ‘information architecture’ (IA) and ‘systems thinking’ were the core tools. To build agentic systems, we must first extract and codify how subject matter experts (SMEs) make decisions.

Tools like ‘job task mapping’ and ‘mental models’ help us capture the repeatable expertise. I call it creating a “carbon copy” of what someone knows so an agent can do the same.

Think:

“What should a sales agent know from our playbook?”

“What knowledge does procurement rely on daily?”

When you design this flow of knowledge across tasks, you're not just building an agent — you’re building the knowledge infrastructure it depends on.

2. Use ‘Jobs To Be Done’ to define memory requirements

I’m seeing a common misconception across social media: people think the LLM is the “brain” of an agent.

It’s not. It’s just the voice.

Let’s get the facts straight. LLMs are stateless. They retain nothing between calls beyond what fits in the context window, which means they can’t learn, adapt, or operate in context without memory. And memory is what turns an LLM into an agentic AI system.

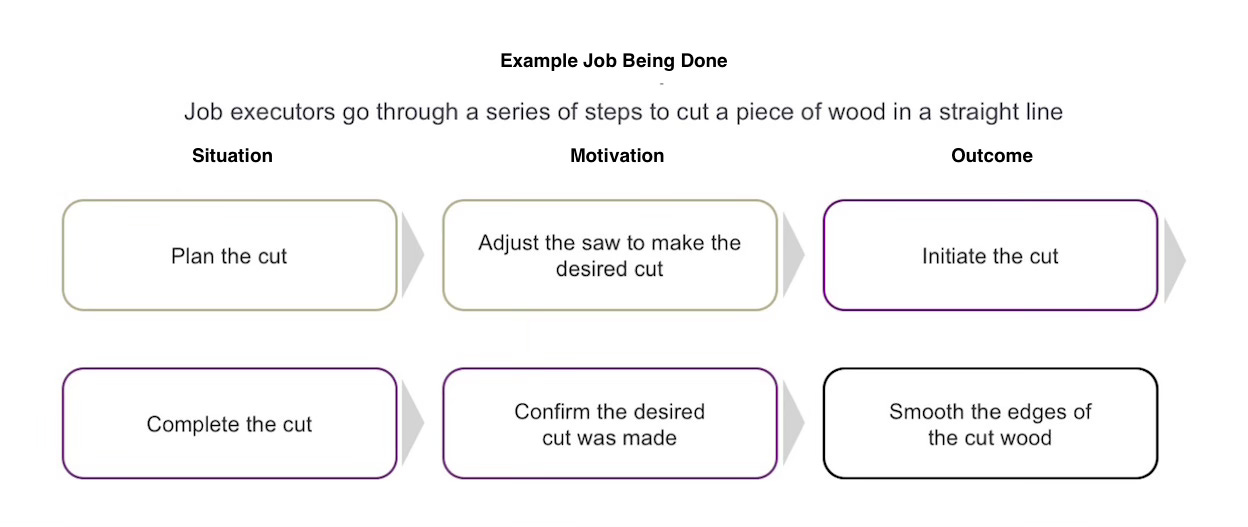

To design memory effectively, I find Clayton Christensen’s Jobs To Be Done (JTBD) framework critical. Originally built for understanding customer behaviour, JTBD helps us capture the goals, skills, and knowledge required to perform a job — exactly what an agent needs to store.

JTBD helps you model the goal-state of an SME, not just the workflow. It’s the simplest way I know to structure memory with purpose, using three parts:

Situation - Identify which data (knowledge) is useful (vs noise).

Motivation - Design prompts (skills) that clarify the tasks.

Outcome - Define performance criteria (prevent hallucinations) for the job to be done well.
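One way to make these three parts concrete is to write them down as a small record per job. Here’s a minimal sketch; the `JobSpec` class, its field names, and the sample procurement job are my own illustrative assumptions, not part of JTBD itself or of any framework named in this post.

```python
from dataclasses import dataclass, field


@dataclass
class JobSpec:
    """Hypothetical record of one job to be done, used to scope agent memory."""
    name: str
    situation: list[str] = field(default_factory=list)   # knowledge worth storing
    motivation: list[str] = field(default_factory=list)  # tasks the prompts must clarify
    outcome: list[str] = field(default_factory=list)     # criteria for "done well"

    def is_complete(self) -> bool:
        # A job is ready for memory design only when all three parts are filled in.
        return all([self.situation, self.motivation, self.outcome])


# Illustrative example only: the job and its entries are made up.
job = JobSpec(
    name="Approve a supplier invoice",
    situation=["purchase order", "supplier contract terms"],
    motivation=["match invoice lines to the purchase order"],
    outcome=["every approved amount cites a purchase order line"],
)
draft = JobSpec(name="Screen a candidate")  # nothing captured from the SME yet

print(job.is_complete(), draft.is_complete())
```

An incomplete spec is a useful signal in its own right: it tells you which SME conversation still needs to happen before anyone designs memory.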

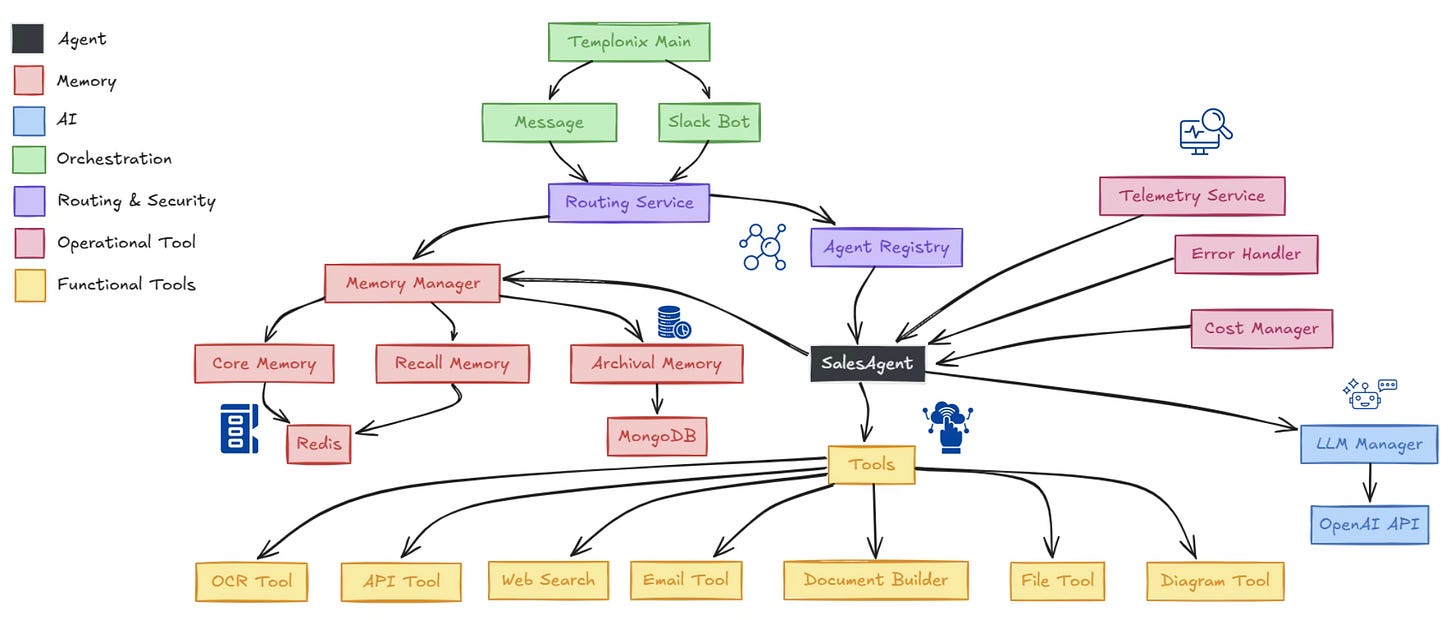

Once we understand what knowledge and skills we need to store, we can define three memory types:

Core Memory (Short-Term): The current task and its context.

Recall Memory (Medium-Term): Past events and decisions that inform the present.

Archival Memory (Long-Term): Patterns, exceptions, and learnings over time.

With these tiers in place, the agent is no longer ‘flooded with knowledge’: each task pulls in only the knowledge it needs. Smaller, more relevant context means fewer tokens per call, which helps us manage agentic system costs.
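To show how the three tiers might fit together, here’s a rough sketch. Everything in it is an assumption for illustration: the `AgentMemory` class, the bound of 50 recall entries, and especially the keyword match, which stands in for whatever retrieval a real system would use.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class AgentMemory:
    """Hypothetical three-tier memory; tier names mirror the ones above."""
    core: dict = field(default_factory=dict)                         # current task and context
    recall: deque = field(default_factory=lambda: deque(maxlen=50))  # recent events, bounded
    archival: list = field(default_factory=list)                     # long-term learnings

    def remember_event(self, event: str) -> None:
        # The oldest recall entries drop off automatically once maxlen is reached.
        self.recall.append(event)

    def archive(self, learning: str) -> None:
        self.archival.append(learning)

    def build_context(self, keyword: str, max_items: int = 3) -> list[str]:
        """Return only entries mentioning the keyword, capped at max_items."""
        pool = list(self.recall) + self.archival
        hits = [item for item in pool if keyword.lower() in item.lower()]
        return hits[:max_items]


memory = AgentMemory(core={"task": "renew contract for Acme"})
memory.remember_event("Acme asked for a discount in March")
memory.remember_event("Globex paid late twice")
memory.archive("Acme renewals usually close after a pricing call")

context = memory.build_context("acme")
print(context)
```

The cap on `build_context` is the part that matters for cost: the agent sees a handful of relevant entries rather than everything it has ever stored.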

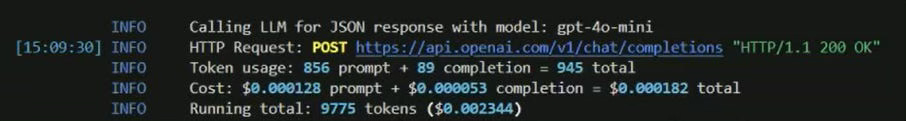

3. Cost model the lifecycle of your Agent

In my 20 years of building digital products, one lesson has stuck:

“If you can’t show the financial impact, your product won’t get funded.”

The same applies to agentic AI.

Agents aren’t just cool automation demos; they’re microeconomic systems. They consume new kinds of resources: tokens, API calls whose volume is hard to predict, and repeated reasoning cycles. Each has a cost implication.

Most PMs aren’t used to thinking in token budgets or inference cycles. But if you don’t, you risk building something clever that’s too expensive to scale.

That’s why I use rapid, real-world prototyping — paired with a cost assurance window — to test value and risk upfront. We use this in Templonix and model cost across every agent iteration to track when it starts paying for itself.

These are some of the questions I ask to determine whether my agent is going to break budgets or build profits:

What’s the cost per reasoning cycle?

How often does it hit APIs — and are they external (more costly)?

What happens when memory usage scales across users?

“A product manager who can’t talk about financial metrics is going to fly blind and deliver a flopped agentic solution.”

Here’s what I track in every agent build:

Token consumption per task

Latency and reliability of external tools, e.g. API calls to a CRM

Total cost of ownership (TCO) over the life of the agent

Net present value (NPV) of the SME time the AI Agent frees up

Return on investment (ROI) of an AI Agent compared to an existing FTE cost
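The arithmetic behind these metrics is simple enough to sketch. The function names and every number below are placeholders I’ve made up for illustration; they are not real vendor prices or figures from an actual build.

```python
def cost_per_task(input_tokens, output_tokens, price_in_per_1k, price_out_per_1k, cycles=1):
    """Token cost of one task that runs `cycles` reasoning cycles."""
    per_cycle = (input_tokens / 1000) * price_in_per_1k \
        + (output_tokens / 1000) * price_out_per_1k
    return per_cycle * cycles


def npv(cash_flows, discount_rate):
    """Net present value; cash_flows[0] is the upfront flow at time zero."""
    return sum(cf / (1 + discount_rate) ** t for t, cf in enumerate(cash_flows))


def roi(total_benefit, total_cost):
    """Return on investment as a fraction (0.5 means 50%)."""
    return (total_benefit - total_cost) / total_cost


# Placeholder inputs: 2,000 input and 500 output tokens per cycle, 4 cycles per task.
task_cost = cost_per_task(2000, 500, price_in_per_1k=0.01, price_out_per_1k=0.03, cycles=4)

# Placeholder cash flows: build cost up front, then value of SME time freed each year.
value = npv([-1000, 600, 600], discount_rate=0.10)

payback = roi(total_benefit=1500, total_cost=1000)

print(round(task_cost, 4), round(value, 2), payback)
```

Multiplying `task_cost` by expected task volume gives the running-cost side of TCO; the NPV and ROI lines are where you compare that against the SME or FTE time the agent frees up.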

Finance isn’t a blocker — it’s a design input. Treat it like one.

4. Build governance in from ‘Day Zero’

When you design agents, you’re not just designing workflows; you’re creating digital actors.

These actors make decisions, access sensitive data, and trigger actions. That’s why governance can’t be an afterthought — it must be designed in from the start.

Think of governance as the agent’s operating constitution. It defines what the agent can do, with whom, and under what conditions.

“This is about safety, trust, and control — and it’s your job as a PM to lead it.”

The shift here is from permission-based thinking to policy-first architecture.

I start by working with SMEs to define the rules of the job:

Who can use the agent?

What input formats are allowed?

What systems can it access?

When should it escalate to a human?

This is the essence of the Policy-First Guardrail Pattern — separate policy from enforcement. Write clear rules legal and IT can review, then get the technical team to implement logic to enforce them before the agent goes live.

Key tips to get you started

Start with a whitelist (define what’s allowed, not what isn’t)

Make policies human-readable

Log every action for audit and learning

Fail safe — deny by default

Layer your checks — never rely on just one

Articulating your agent’s permissions, boundaries, and escalation triggers before any reasoning loop begins means you’re building governance right.
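Here’s a minimal sketch of what separating policy from enforcement can look like. The `Policy` and `Guardrail` classes, the field names, and the sample values are all hypothetical; a real build would load the policy from a document that legal and IT have reviewed.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Policy:
    """Human-readable rules, kept separate from the code that enforces them."""
    allowed_users: frozenset
    allowed_systems: frozenset
    allowed_formats: frozenset
    escalate_above_amount: float


@dataclass
class Guardrail:
    policy: Policy
    audit_log: list = field(default_factory=list)

    def check(self, user, system, input_format, amount=0.0):
        """Return 'allow', 'escalate', or 'deny'; anything not allowlisted is denied."""
        if user not in self.policy.allowed_users:
            decision = "deny"
        elif system not in self.policy.allowed_systems:
            decision = "deny"
        elif input_format not in self.policy.allowed_formats:
            decision = "deny"
        elif amount > self.policy.escalate_above_amount:
            decision = "escalate"
        else:
            decision = "allow"
        # Every decision is logged, including denials, for audit and learning.
        self.audit_log.append({"user": user, "system": system,
                               "format": input_format, "amount": amount,
                               "decision": decision})
        return decision


policy = Policy(
    allowed_users=frozenset({"sales_ops"}),
    allowed_systems=frozenset({"crm"}),
    allowed_formats=frozenset({"text"}),
    escalate_above_amount=500.0,
)
guard = Guardrail(policy)

decisions = [
    guard.check("sales_ops", "crm", "text", amount=100),      # inside every rule
    guard.check("sales_ops", "payroll", "text"),              # system not allowlisted
    guard.check("sales_ops", "crm", "text", amount=900),      # over the escalation limit
]
print(decisions)
```

Note that the checks run before any reasoning happens, and that the three allowlist checks are layered: failing any one of them is enough to deny.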

5. Know when the Agent needs to explain itself

Autonomy isn’t the point of an agent — outcomes are. And outcomes only matter if people trust the agent’s judgement.

Too often, explainability is treated as an afterthought. But if your agent can’t justify its actions — it’s going to be a liability.

Explainability in agentic systems comes down to three core principles:

Predictable patterns (captured in graphs)

Ontologies that define reasoning

Human-in-the-loop as a designed feature

This is why IA is returning, as I discussed in core skill 1. This method helps us define a taxonomy of ‘entities’ and ‘relationships’, and generates requirements for graphs, ontologies, and human-in-the-loop.

Let’s break these down.

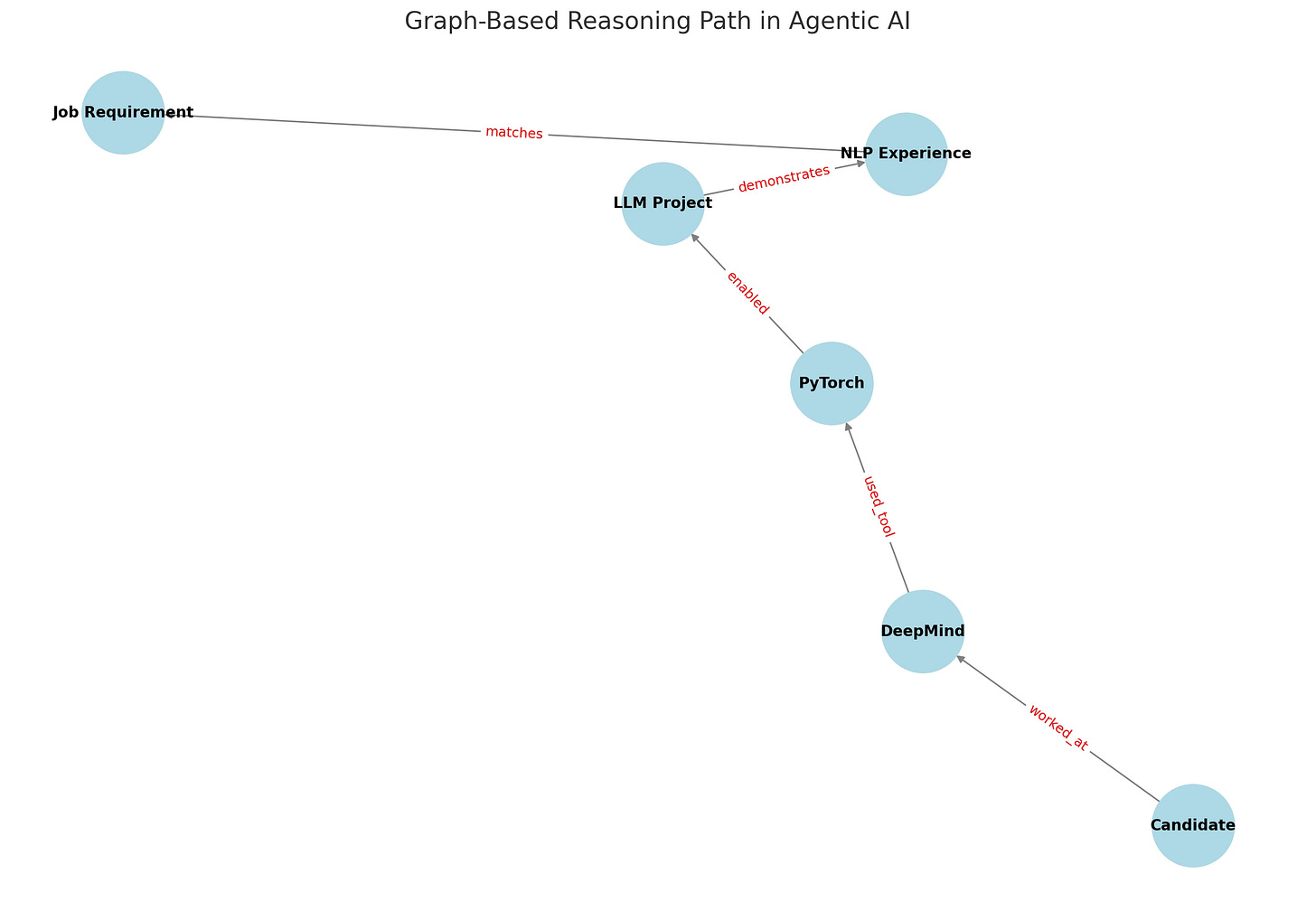

1. From guessing to grounding: Why graphs matter

LLMs offer ‘guestimation’, not reasoning. This is why they hallucinate.

Agents, however, need structure — a navigable map of how knowledge connects. That’s what graphs provide.

Take a recruiting agent screening AI developer candidates. A human recruiter might ask:

Do they know Python?

Where do they use it?

Was it production-grade or hobby work?

How recent was the experience?

An agent can’t prompt its way through this. It needs structured reasoning. Graphs store those relationships, allowing the agent to explain how it reached a conclusion.

With a graph-backed memory, the agent can trace its logic and escalate uncertainty when confidence drops.

2. Ontologies define the agent’s thinking

Graphs work because they follow ontologies — schemas that define how entities and relationships interact.

Let’s see how this works for our recruiter example:

Entities: Candidate, Job, Skill, Employer

Relationships: HAS_SKILL, WORKED_AT, REQUIRES_SKILL

Logic: “If Hugging Face + PyTorch, infer NLP expertise”

This structure gives the agent the power to reason, not just retrieve. And most importantly, it allows for auditable decisions — something executives, regulators, and operational teams all need to see before an agent can be trusted in production.
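To make the recruiter ontology tangible, here’s a toy version using plain Python sets instead of a graph database. The triples, the `RULES` table, and the function names are illustrative assumptions; only the entity and relationship names come from the example above.

```python
# Each triple is (subject, relationship, object): a tiny in-memory graph.
TRIPLES = {
    ("candidate_1", "HAS_SKILL", "Hugging Face"),
    ("candidate_1", "HAS_SKILL", "PyTorch"),
    ("candidate_1", "WORKED_AT", "employer_a"),
    ("job_1", "REQUIRES_SKILL", "NLP"),
    ("job_1", "REQUIRES_SKILL", "Kubernetes"),
}

# Inference rule: holding every skill in the key set implies the inferred skill.
RULES = {frozenset({"Hugging Face", "PyTorch"}): "NLP"}


def objects(subject, relationship):
    """All objects linked to a subject by one relationship type."""
    return {o for s, r, o in TRIPLES if s == subject and r == relationship}


def infer_skills(candidate):
    """Map each inferred skill to the stated skills that justify it."""
    stated = objects(candidate, "HAS_SKILL")
    return {inferred: sorted(required)
            for required, inferred in RULES.items()
            if required <= stated}


def explain_match(candidate, job):
    """One audit line per required skill, citing the evidence used."""
    stated = objects(candidate, "HAS_SKILL")
    inferred = infer_skills(candidate)
    lines = []
    for skill in sorted(objects(job, "REQUIRES_SKILL")):
        if skill in stated:
            lines.append(f"{skill}: stated directly")
        elif skill in inferred:
            lines.append(f"{skill}: inferred from {' + '.join(inferred[skill])}")
        else:
            lines.append(f"{skill}: no evidence, escalate to a human")
    return lines


explanation = explain_match("candidate_1", "job_1")
for line in explanation:
    print(line)
```

The point of the sketch is the return value: every conclusion carries the evidence behind it, which is what makes the decision auditable rather than just plausible.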

3. Human-in-the-Loop Is a Feature

Agents must know when to ask for help:

“I’m unsure — here’s why — can you assist?”

Whether it’s a Slack handoff, an inbox report, or a workflow trigger, designing escalation pathways from the start is imperative. The key is giving the agent logic it can surface when things get unclear.
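An escalation pathway can start as something this small. The sketch below is an assumption-laden illustration: the `Decision` class, the 0.8 threshold, and the way confidence is supplied are all made up, and how a real agent estimates confidence is a separate design problem.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    action: str
    confidence: float  # 0.0 to 1.0, however the system estimates it
    reasons: list


def route(decision, threshold=0.8):
    """Proceed when confident; otherwise hand off with the reasons attached."""
    if decision.confidence >= threshold:
        return {"status": "proceed", "action": decision.action}
    why = "; ".join(decision.reasons)
    return {
        "status": "escalate",
        "message": (f"I'm unsure about '{decision.action}' "
                    f"(confidence {decision.confidence:.2f}). "
                    f"Here's why: {why}. Can you assist?"),
    }


unsure = route(Decision(
    action="shortlist candidate",
    confidence=0.55,
    reasons=["Python experience is five years old", "no production work listed"],
))
sure = route(Decision(action="send interview invite", confidence=0.93, reasons=[]))

print(unsure["message"])
```

Where the message goes (Slack, an inbox, a workflow trigger) is a delivery detail; what matters is that the reasons travel with the request.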

Explainability isn’t a bonus. It’s how agents earn trust.

What can you do now?

If you're a PM looking to lead in the agent economy, the shift starts here:

👉 Think in systems, not features.

Here’s how to begin when someone asks, “Can you build me an AI agent?”

Map repeatable expertise — Find where SMEs apply consistent judgement.

Apply JTBD — Use it to define memory needs and structure the agent’s goal-seeking behaviour.

Learn to model costs — Tokens, latency, NPV. Agents are economic systems — finance matters.

Sketch the memory blueprint —

Core: immediate task

Recall: relevant history

Archival: long-term trace

Use graphs for reasoning — Agents must explain decisions. Build relationships, not just prompts.

Want to go deeper?

At Synaptix, we train everyone from PMs to designers in agentic AI systems using our Templonix© framework, from cost modelling and governance to building prototypes that test for scale and adoption.

👉 Book a discovery call if you're ready to build with impact.

Let’s turn product thinking into AI system design.

Until next time,

Tim

💬 Found this helpful? Share your thoughts, ask a question, or challenge the thinking in the comments.

Thanks for 🔁 Restacking and sharing.
