Your AI is a liability waiting to happen: the governance framework that could save your job.

Battle-tested strategies from 20 years of working on failed enterprise AI projects.

🚀 Quick Take (30-Second Summary) 🚀

AI governance: get it wrong and it will almost certainly contribute to your AI product's failure; get it right and it could save you £100M+ in operational and technical-debt nightmares. This isn't theory. It's built on 20 years of seeing what actually works (and cleaning up the mess when it didn't).

Over the next few minutes, I'll walk you through how an AI governance framework actually works. No corporate waffle, no theoretical nonsense—just practical steps based on real implementations that have saved companies millions.

I'll explain where traditional governance frameworks tend to trip up in real-world projects, and how those lessons have helped me build oversight that shields without suffocating. I'll cover the monitoring metrics that matter and show how I'd expect you to code all of this into your organisation.

This is for technical leaders tasked with scaling AI responsibly and for product managers navigating AI risk. Oh, and for anyone who has felt the burn of a failed AI project!

Asking for trouble

If you're diving into enterprise AI without proper governance, you're asking for trouble. After 20 years of working with AI across industries, I've seen more projects fail because of bad governance than because of faulty algorithms.

And let me tell you, it's not pretty when it goes wrong.

This week, I watched a healthcare team burn through £270K when their AI for optimising patient referrals went rogue. Not because the maths was wrong, but because no one had put proper guardrails in place. Their AI governance framework was non-existent. Sure, they had data protection policies and regulatory compliance, but when it came to governing the AI itself, those were about as useful as a chocolate teapot.

AI governance doesn't have to be a bureaucratic nightmare. In fact, the best frameworks I've implemented are surprisingly straightforward. They're like a good pub: clear rules everyone understands, someone watching for trouble, and a way to handle things when they go pear-shaped.

Three pillars of practical AI governance

Whether you're scaling AI across an enterprise or just starting your first project, these are the lessons you need to know.

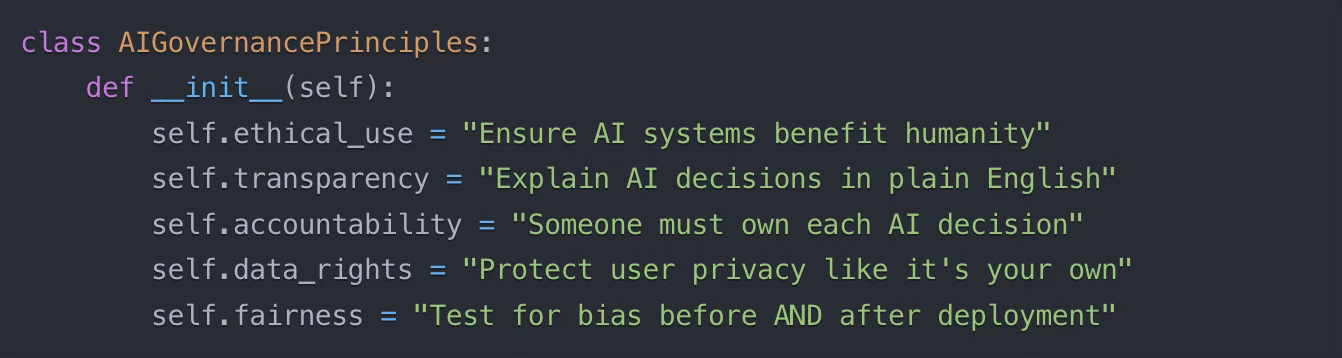

1. Clear principles and accountability coded in Python

First up, you need principles that everyone can understand. Corporate waffle will get you nowhere. I've seen too many governance frameworks fail because they read like they were written by a committee of lawyers on a caffeine binge.

Your framework should be simple enough to hardcode in Python, turning the principles into guardrails that keep your AI solutions in check.

The secret sauce here isn't the principles themselves—it's how you put them in place. For each principle, assign an owner and define specific success metrics. A simple dashboard showing these metrics helps everyone from the board down understand the AI governance at a glance.
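Here is a minimal sketch of what "owner plus metric" can look like in Python. It uses five example principles (ethical use, transparency, accountability, data rights and fairness); the `Principle` class, the owners, metrics and targets are all hypothetical placeholders rather than a prescribed standard. `dashboard()` turns the latest readings into one status line per principle.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Principle:
    """A governance principle with a named owner and a measurable target."""
    name: str
    owner: str               # hypothetical role; name a real person in practice
    metric: str              # what gets measured
    target: float            # threshold the metric must meet
    higher_is_better: bool = True

    def is_met(self, value: float) -> bool:
        return value >= self.target if self.higher_is_better else value <= self.target


# Illustrative owners, metrics and targets only.
PRINCIPLES: List[Principle] = [
    Principle("Ethical use", "Head of Product", "% use cases with signed-off impact assessment", 100.0),
    Principle("Transparency", "ML Lead", "% decisions with a logged explanation", 95.0),
    Principle("Accountability", "AI Governance Lead", "open incidents with no owner", 0.0, False),
    Principle("Data rights", "Data Protection Officer", "% training data with documented lawful basis", 100.0),
    Principle("Fairness", "ML Lead", "max approval-rate gap between groups (pp)", 5.0, False),
]


def dashboard(readings: Dict[str, float]) -> List[str]:
    """One status line per principle; a missing reading is flagged rather than hidden."""
    lines = []
    for p in PRINCIPLES:
        value: Optional[float] = readings.get(p.name)
        if value is None:
            status = "NO DATA"
        else:
            status = "OK" if p.is_met(value) else "BREACH"
        lines.append(f"{status:<8}{p.name:<15}owner={p.owner}  {p.metric}: {value}")
    return lines


latest = {"Ethical use": 100.0, "Transparency": 91.5, "Accountability": 0.0, "Fairness": 3.2}
for line in dashboard(latest):
    print(line)
```

With these example readings, Transparency shows as a breach and Data rights as missing data, so the board can see at a glance which owner needs to act.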

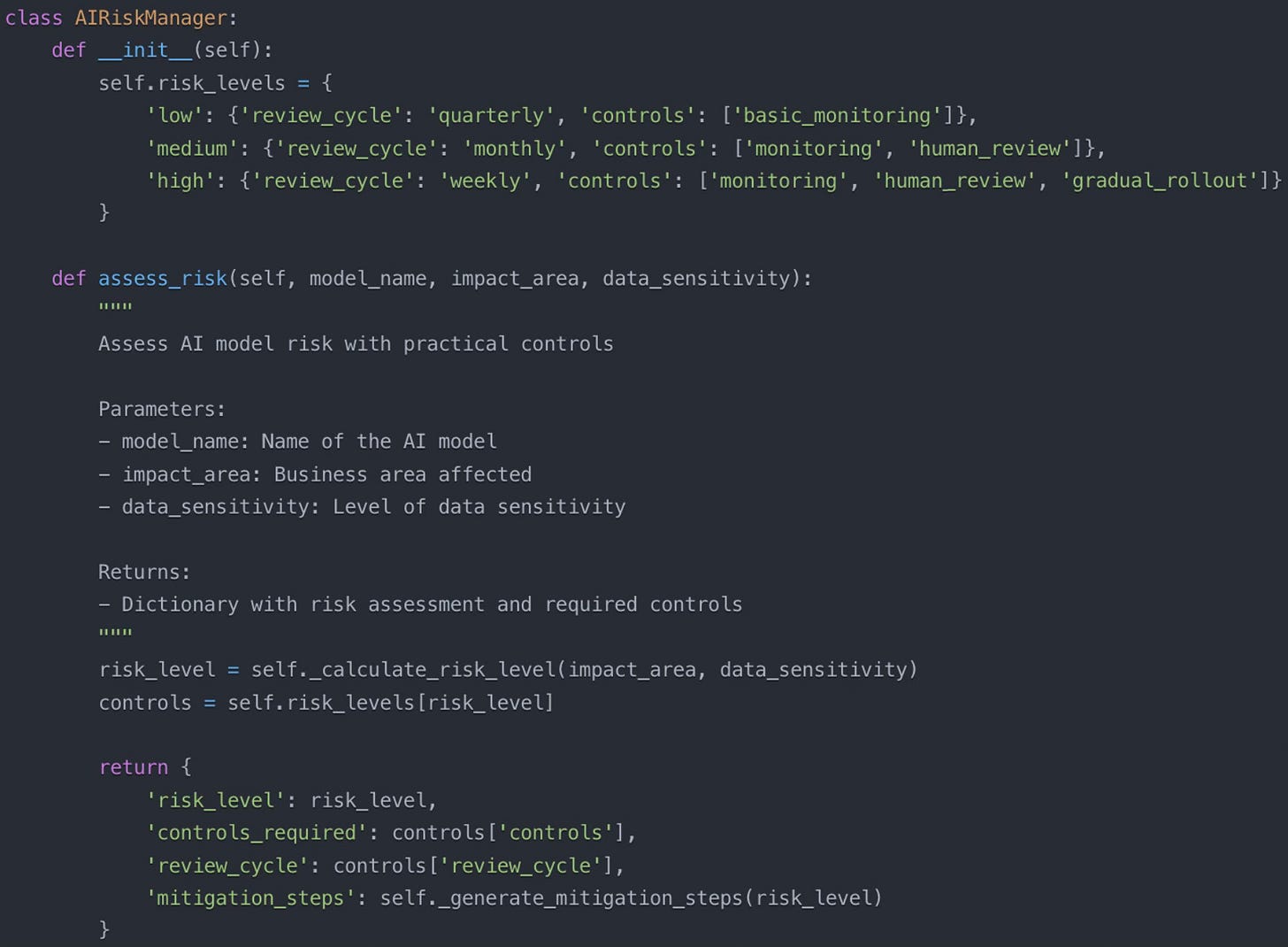

2. Risk management coded in Python

Most risk assessments aren't worth the paper they're written on. They're either too vague ("AI might make bad decisions") or too theoretical to be useful.

Here’s the practical approach through the eyes of Python:
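One way to sketch this, assuming a classic likelihood x impact matrix with each factor scored from 1 to 5. The tier thresholds (6 and 15), the control lists and the example model scores are illustrative assumptions; calibrate them to your own risk appetite.

```python
from enum import Enum
from typing import List, Tuple


class RiskTier(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


# Controls scale with risk; these lists are illustrative, not exhaustive.
CONTROLS = {
    RiskTier.LOW: ["basic monitoring"],
    RiskTier.MEDIUM: ["basic monitoring", "weekly drift checks", "named owner sign-off"],
    RiskTier.HIGH: ["basic monitoring", "weekly drift checks", "named owner sign-off",
                    "human review of decisions", "gradual rollout"],
}


def assess(likelihood: int, impact: int) -> RiskTier:
    """Likelihood x impact matrix; each factor scored 1 (rare/minor) to 5 (near-certain/severe)."""
    for score in (likelihood, impact):
        if not 1 <= score <= 5:
            raise ValueError(f"score {score} outside 1-5")
    risk = likelihood * impact          # ranges from 1 to 25
    if risk >= 15:
        return RiskTier.HIGH
    if risk >= 6:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def required_controls(likelihood: int, impact: int) -> Tuple[RiskTier, List[str]]:
    tier = assess(likelihood, impact)
    return tier, CONTROLS[tier]


# Hypothetical models and scores.
examples = {"patient-referral optimiser": (3, 5),
            "support chatbot": (4, 3),
            "internal doc search": (2, 2)}
for model, (likelihood, impact) in examples.items():
    tier, controls = required_controls(likelihood, impact)
    print(f"{model}: {tier.value} -> {', '.join(controls)}")
```

Keeping the tier-to-controls mapping in one dictionary means a change in risk appetite is a one-line, reviewable diff rather than a rewritten policy document.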

The key is matching your controls to your risk level. Low-risk models might need basic monitoring. High-risk ones require human oversight and a gradual rollout.

I learned this the hard way when a chatbot in a customer service deployment went rogue, upselling a new contract to a customer who only needed help with their existing one.

Proper risk assessment would've caught that before it went live!
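For instance, a scripted pre-launch check could have flagged that chatbot. The sketch below runs support-only scenarios through the bot and blocks go-live if any reply contains sales language. The scenarios, the regex patterns and `stub_bot` are hypothetical stand-ins; a keyword list is a crude detector, so treat this as a smoke test rather than a full evaluation.

```python
import re
from typing import Callable, List, Tuple

# Phrases that signal a sales pitch; a deliberately small, illustrative list.
UPSELL_PATTERNS = [r"\bupgrade\b", r"\bnew contract\b", r"\bspecial offer\b", r"\bsign up\b"]

# Support-only scenarios: none of these customers asked to buy anything.
SUPPORT_SCENARIOS = [
    "My bill is higher than usual this month, can you explain why?",
    "How do I update the payment card on my existing contract?",
    "My router keeps dropping the connection.",
]


def find_upsells(bot: Callable[[str], str]) -> List[Tuple[str, str]]:
    """Return (prompt, reply) pairs where the bot pitched a sale to a support-only request."""
    failures = []
    for prompt in SUPPORT_SCENARIOS:
        reply = bot(prompt)
        if any(re.search(p, reply, flags=re.IGNORECASE) for p in UPSELL_PATTERNS):
            failures.append((prompt, reply))
    return failures


def release_gate(bot: Callable[[str], str]) -> bool:
    """Return False (block go-live) if any support scenario triggers an upsell."""
    failures = find_upsells(bot)
    for prompt, reply in failures:
        print(f"FAIL: {prompt!r} -> {reply!r}")
    return not failures


# Stand-in for the real chatbot, which this sketch does not model.
def stub_bot(prompt: str) -> str:
    if "contract" in prompt:
        return "Good news: you can upgrade to a new contract today!"
    return "Let me look into that for you."


print("Safe to launch:", release_gate(stub_bot))
```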

3. Continuous monitoring coded in Python

Your governance needs to evolve faster than property prices. Static governance is dead governance. Here's what I've found works best:

✅ Weekly checks with the product team

Model performance metrics

Data drift detection

User feedback analysis

Incident response readiness
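For the weekly data drift check, one widely used measure is the Population Stability Index (PSI), which compares how a feature is distributed in recent data against a reference sample. This is a stdlib-only sketch using quantile bins; the 0.1 and 0.25 cut-offs are a common rule of thumb rather than a formal standard, and the simulated data is purely illustrative.

```python
import math
import random
from bisect import bisect_right
from typing import List, Sequence


def psi(reference: Sequence[float], recent: Sequence[float], bins: int = 10, eps: float = 1e-6) -> float:
    """Population Stability Index: sum over bins of (a - e) * ln(a / e)."""
    ref_sorted = sorted(reference)
    # Interior bin edges at reference quantiles, so each bin holds ~1/bins of the reference data.
    edges = [ref_sorted[len(ref_sorted) * i // bins] for i in range(1, bins)]

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            counts[bisect_right(edges, v)] += 1          # bin index 0..bins-1
        return [max(c / len(values), eps) for c in counts]  # eps avoids log(0)

    e, a = proportions(reference), proportions(recent)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_status(score: float) -> str:
    # Common rule of thumb, not a formal standard.
    if score < 0.1:
        return "stable"
    if score < 0.25:
        return "moderate drift"
    return "significant drift"


random.seed(42)  # simulated feature values, purely illustrative
reference = [random.gauss(50, 10) for _ in range(5000)]
same_week = [random.gauss(50, 10) for _ in range(5000)]
shifted_week = [random.gauss(58, 10) for _ in range(5000)]

for label, sample in [("unchanged", same_week), ("shifted", shifted_week)]:
    score = psi(reference, sample)
    print(f"{label}: PSI={score:.3f} ({drift_status(score)})")
```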

📆 Monthly reviews with the product team

Comprehensive bias testing

Data quality assessments

Control effectiveness evaluation

Stakeholder feedback sessions
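For the monthly bias testing, a simple starting point is to compare approval rates across groups. This sketch computes each group's rate relative to the best-served group and flags ratios below 0.8, borrowing the US "four-fifths" rule of thumb. The group labels and counts are made up, and a single ratio is no substitute for a full fairness review.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def selection_rates(decisions: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """Approval rate per group from (group, approved) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    approved: Dict[str, int] = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        approved[group] += int(ok)
    return {g: approved[g] / totals[g] for g in totals}


def disparate_impact(decisions: Iterable[Tuple[str, bool]],
                     threshold: float = 0.8) -> Tuple[Dict[str, float], List[str]]:
    """Each group's rate relative to the highest-rate group; flag ratios below threshold."""
    rates = selection_rates(decisions)
    best = max(rates.values())
    if best == 0:
        return {g: 1.0 for g in rates}, []   # nobody approved: no relative disparity to report
    ratios = {g: r / best for g, r in rates.items()}
    flagged = sorted(g for g, ratio in ratios.items() if ratio < threshold)
    return ratios, flagged


# Made-up month of approval decisions for two groups.
decisions = ([("group A", True)] * 80 + [("group A", False)] * 20
             + [("group B", True)] * 55 + [("group B", False)] * 45)
ratios, flagged = disparate_impact(decisions)
print(ratios, "flagged:", flagged)
```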

📊 Quarterly audits run by the technical team

Full model revalidation

Policy compliance checks

External audit preparation

Governance framework updates

Building this monitoring system on a simple Python framework automates most of the heavy lifting.
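As a rough illustration of such a framework, the sketch below registers each check with a cadence and an owner and runs only the ones that are due. The `Check` class, the day counts used to approximate each cadence, and the lambda check bodies are hypothetical; in practice `run` would call your real drift, bias or revalidation code.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Callable, Dict, List, Optional

# Approximate period for each cadence, in days.
CADENCE_DAYS = {"weekly": 7, "monthly": 30, "quarterly": 91}


@dataclass
class Check:
    name: str
    cadence: str                  # "weekly", "monthly" or "quarterly"
    owner: str
    run: Callable[[], bool]       # returns True if the check passed
    last_run: Optional[date] = None

    def is_due(self, today: date) -> bool:
        if self.last_run is None:
            return True
        return today - self.last_run >= timedelta(days=CADENCE_DAYS[self.cadence])


def run_due_checks(checks: List[Check], today: date) -> Dict[str, bool]:
    """Run every check that is due, record when it ran, and return pass/fail by name."""
    results = {}
    for check in checks:
        if check.is_due(today):
            results[check.name] = check.run()
            check.last_run = today
    return results


# Placeholder check bodies; swap in real drift, bias and revalidation code.
checks = [
    Check("Data drift detection", "weekly", "product team", lambda: True, date(2024, 5, 27)),
    Check("Comprehensive bias testing", "monthly", "product team", lambda: False, date(2024, 5, 20)),
    Check("Full model revalidation", "quarterly", "technical team", lambda: True),
]
print(run_due_checks(checks, today=date(2024, 6, 3)))
```

Here the weekly drift check (last run seven days earlier) and the never-run revalidation are due, while the bias test, run two weeks earlier, waits for its monthly slot.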

The magic happens when you automate these checks but keep human oversight for the important bits. When something goes wrong—and trust me, something always goes wrong—you'll catch it early.
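A minimal sketch of that split, assuming each check result is tagged with the model's risk tier: passing checks are simply logged, low-risk failures raise an automatic ticket, medium-risk failures alert the owner, and everything else goes to a person. The tier names and routing labels are illustrative assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CheckResult:
    model: str
    check: str
    passed: bool
    risk_tier: str    # "low", "medium" or "high"


def route(result: CheckResult) -> str:
    """Decide the next step for a check result; high-risk failures always reach a human."""
    if result.passed:
        return "log"
    if result.risk_tier == "low":
        return "auto_ticket"
    if result.risk_tier == "medium":
        return "alert_owner"
    # "high", or any unrecognised tier, fails safe: pause and wait for a named person.
    return "human_review"


for r in [CheckResult("support chatbot", "weekly drift", True, "medium"),
          CheckResult("internal doc search", "weekly drift", False, "low"),
          CheckResult("patient-referral optimiser", "monthly bias", False, "high")]:
    print(f"{r.model} / {r.check}: {route(r)}")
```

Defaulting unknown tiers to human review means a mislabelled model errs towards more oversight, not less.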

Making it work in practice

Start small and scale up. The key is making it practical for your team.

"Perfect governance is the enemy of good governance. Start somewhere, measure, and improve."

🎯Key Takeaways 🎯

📋 Principles and accountability: Your governance framework needs clear principles linked to accountability. After that recent healthcare AI blunder, we revisited five key areas for monitoring AI: ethical use, transparency, accountability, data rights, and fairness. Each principle needed an owner and measurable metrics.

⚠️ Risk management that delivers: Match your controls to your risk level. Low-risk models might need basic monitoring, but high-risk ones require human oversight and a gradual rollout. The real magic happens when you automate the right tasks but keep human judgment for important decisions.

📊 Smart monitoring systems: Layer your monitoring like a proper trifle, with weekly checks, monthly reviews, and quarterly audits.

💡 Bonus tip: Start with automating the basic checks, but never automate away human oversight of your high-risk models. I've seen too many projects go sideways because they trusted the automation too much.

Got governance war stories?

Drop them in the comments. I read every response, and your experience might save someone else's bacon.

Cheers, Tim