The CPO's Playbook: Working with architects to ensure AI Data Infrastructure has great impact

From data silos to domain-expert datasets: supporting the modern business on its road to AI goldmines.

🚀 Quick Take (15-Second Summary) 🚀

Listen up, product leaders. After a week at an AI summit in Dubai, I've seen how widely the approach to building data foundations is misunderstood.

Building AI without proper data infrastructure is like trying to build a skyscraper on quicksand. I'm deep into building domain-specific datasets on a project, and creating a strong data foundation is a must.

Organise your messy data into datasets to prepare them for training LLMs and unlock an AI goldmine.

The $1M Question: Why your AI projects keep failing

Have you ever tried to cook a gourmet meal with ingredients all over the place? Some might be locked away, while others could be mislabeled. That's what I saw this week at the AI summit with most companies.

Companies were leaping to implement AI whilst overlooking a fundamental question: how should data be engineered and structured around a domain?

I've spent two decades helping organisations with this sort of mess. Let me tell you - the secret to great AI at scale isn't the latest fancy algorithms. It's about having your data house in order.

Getting Your Data Ducks in a Row

Here's a proper shocker for you - 80% of AI projects never make it to production. And do you know what the biggest culprit is? It's not dodgy algorithms or budget constraints. It's messy, disorganised data infrastructure.

After watching countless companies face-plant their AI initiatives, here's my approach based on lessons learned.

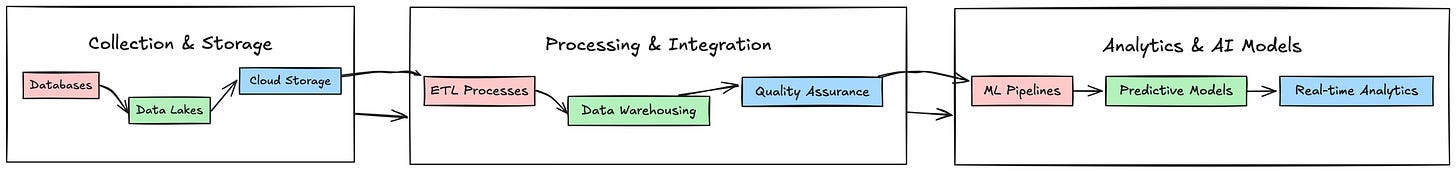

The three pillars of solid data infrastructure

Let's cut straight to the chase. Building data infrastructure isn't rocket science, but it does need proper architecture.

My time in the trenches on recent projects is yet another reminder of why starting with the AI is flawed. It's the story behind the data that counts.

I've boiled down my lessons learned into three core pillars that determine AI success.

Get these wrong, and you're essentially wasting money. Get them right, and you'll build an AI powerhouse that actually delivers results.

1. Collection & Storage: This is your cellar, where you keep all the good stuff. You need:

- Robust databases that can handle the load.

- Data lakes that can store everything from structured data to raw text.

- Cloud storage that scales with your needs.

2. Processing & Integration: This is your bar setup. It’s where the magic happens:

- ETL processes that clean and prepare your data.

- Integration points that bring different data sources together.

- Quality checks that ensure everything's up to snuff.

3. Analytics & AI Models: This is where you serve up the goods:

- Machine learning pipelines that can handle real-time processing.

- Predictive models that actually deliver value.

- Monitoring systems that keep everything running smoothly.
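To make the second pillar a bit more concrete, here's a minimal sketch of a clean-then-check step. It isn't taken from any of my projects: the field names (`customer_id`, `amount`) and the rules are assumptions picked purely for illustration, and a real pipeline would sit on top of proper ETL tooling rather than plain Python lists.

```python
from dataclasses import dataclass, field

# Hypothetical schema: the fields every record must carry to be usable downstream.
REQUIRED_FIELDS = ("customer_id", "amount")


@dataclass
class QualityReport:
    accepted: list = field(default_factory=list)
    rejected: list = field(default_factory=list)  # (record, problems) pairs


def clean(record: dict) -> dict:
    """Transform step: normalise key names, trim strings, turn empty strings into None."""
    cleaned = {}
    for key, value in record.items():
        if isinstance(value, str):
            value = value.strip() or None
        cleaned[key.strip().lower()] = value
    return cleaned


def check(record: dict) -> list:
    """Quality step: return a list of problems; an empty list means the record passes."""
    problems = [f"missing {name}" for name in REQUIRED_FIELDS if record.get(name) is None]
    amount = record.get("amount")
    if amount is not None:
        try:
            float(amount)
        except (TypeError, ValueError):
            problems.append("amount is not numeric")
    return problems


def run_pipeline(raw_records: list) -> QualityReport:
    """Clean every record, then route it to accepted or rejected."""
    report = QualityReport()
    for raw in raw_records:
        record = clean(raw)
        problems = check(record)
        if problems:
            report.rejected.append((record, problems))
        else:
            report.accepted.append(record)
    return report


raw = [
    {"Customer_ID": " C001 ", "amount": "19.99"},
    {"customer_id": "", "amount": "5.00"},
    {"customer_id": "C003", "amount": "abc"},
]
report = run_pipeline(raw)
print(len(report.accepted), "accepted,", len(report.rejected), "rejected")
```

The design choice worth noticing is that rejected records are kept alongside the reason they failed, rather than silently dropped. That list is what you take back to the team that owns the source system.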

Real Talk: A Case Study that actually worked

Let me tell you about the healthcare project I'm currently working on. They are drowning in transaction data - millions of patient records spread across different systems, all speaking different languages.

Sound familiar?

“Our approach started with: ‘What problem is the data solving, so that the data insight story can come through?’”

This approach (after many user and data journeys - check out my workshop for this) shaped how we designed a distributed architecture ready for AI. In this instance, we needed Apache Kafka for real-time streaming and Delta Lake for storage with fast retrieval.
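As a rough illustration of what "systems speaking different languages" means in practice, here's a small sketch of mapping records from two source systems onto one shared shape before they go anywhere near a stream. To be clear, this is not the project's actual code: both source formats, every field name, and the helper functions are hypothetical, and the sketch deliberately stops short of calling Kafka or Delta Lake so it runs with the standard library alone.

```python
import json
from datetime import datetime


def from_clinic_system(row: dict) -> dict:
    """Hypothetical source A: uses 'PatientNo' and day/month/year dates."""
    return {
        "patient_id": str(row["PatientNo"]),
        "visit_date": datetime.strptime(row["VisitDate"], "%d/%m/%Y").date().isoformat(),
        "source": "clinic",
    }


def from_billing_system(row: dict) -> dict:
    """Hypothetical source B: uses 'pid' and ISO 8601 timestamps."""
    return {
        "patient_id": str(row["pid"]),
        "visit_date": datetime.fromisoformat(row["ts"]).date().isoformat(),
        "source": "billing",
    }


def to_message(event: dict) -> tuple:
    """Serialise an event to a (key, value) pair of bytes.

    Keying by patient_id is an assumption: streaming platforms commonly use the
    key to keep one entity's events together and in order.
    """
    key = event["patient_id"].encode("utf-8")
    value = json.dumps(event, sort_keys=True).encode("utf-8")
    return key, value


events = [
    from_clinic_system({"PatientNo": 1042, "VisitDate": "03/02/2024"}),
    from_billing_system({"pid": "1042", "ts": "2024-02-03T09:15:00"}),
]
messages = [to_message(e) for e in events]

for key, value in messages:
    print(key, value)
```

Once both systems produce the same shape, everything downstream only has to understand one format, and the mess stays at the edges where the source teams can fix it.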

The Result?

A ‘minimum lovable AI’ model that’s enabled 40% faster decision-making on patient service design for chronic home-based care.

The biggest hurdle? Breaking down data silos and the teams wrapped around them.

Breaking down those pesky data silos

You know what kills AI projects faster than anything else? Data silos. They're like having separate pubs that don't share their beer lists - makes no sense, right?

Here's how I've been tearing them down:

1. Sort Out Your Governance:

Create clear rules about who can access what data and how it should be used. It's like having a good bouncer - keeps the wrong people out while making sure the regulars get what they need.

2. Build Cross-Functional Teams:

Get your data engineers and scientists talking to your business folks. Magic happens when everyone's speaking the same language.

3. Automate What You Can:

Use proper tools to keep data flowing smoothly between systems. The less manual intervention, the better.
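The governance point is easier to discuss with a team when the rules are written down as data rather than tribal knowledge. Here's a minimal sketch of that idea, assuming a simple role-and-purpose model. The dataset names, roles, and purposes are all made up for illustration; in practice you'd express this in whatever access-control tooling your platform already provides.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Policy:
    dataset: str
    allowed_roles: frozenset
    purposes: frozenset


# Hypothetical policies: who may read which dataset, and for what purpose.
POLICIES = {
    "patient_records": Policy(
        dataset="patient_records",
        allowed_roles=frozenset({"clinician", "data_engineer"}),
        purposes=frozenset({"care", "pipeline_maintenance"}),
    ),
    "service_usage": Policy(
        dataset="service_usage",
        allowed_roles=frozenset({"analyst", "data_engineer", "product_manager"}),
        purposes=frozenset({"reporting", "service_design"}),
    ),
}


def can_access(role: str, dataset: str, purpose: str) -> bool:
    """The bouncer: deny by default, allow only when role and purpose both match."""
    policy = POLICIES.get(dataset)
    if policy is None:
        return False  # unknown dataset, so nobody gets in
    return role in policy.allowed_roles and purpose in policy.purposes


print(can_access("analyst", "service_usage", "reporting"))    # allowed by the policy above
print(can_access("analyst", "patient_records", "reporting"))  # role not on the list
```

Deny-by-default is the key design choice here: a dataset nobody has written a policy for is a dataset nobody can read, which tends to surface forgotten silos rather quickly.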

Making it scale ready

If you're serious about AI, you need awesome data infrastructure that can grow with you.

Here's what I’ve learned from multiple projects to achieve this:

1. Go Cloud-Native:

AWS, Azure, Google Cloud - pick your poison. Just make sure you're using cloud services that can scale up and down as needed, or you're in for a big bill!

2. Containerize Everything:

Docker and Kubernetes have been my best mates over many deployments. Why? They make it dead easy to deploy and scale your AI workloads.

3. Think About the Future:

Build with your users' growth in mind. What works for your current data volume might not cut it next year as their behaviours change.

✅ Key Takeaways ✅

Your AI is only as good as your data infrastructure. Get that sorted first, then worry about the fancy AI toys.

🚀 Break Down Those Silos - No more isolated data pockets. Get everything talking to each other properly.

💡 Scale Smart, Not Hard - Build for the future, but start with what you need today. You can always add more later.

I'd love to hear about your data infrastructure challenges.

Drop a comment below or give me a shout - I read every response and love a good chat about this stuff.

Until next time,

Tim