Why 60% of PMs using ChatGPT, Gemini, or Perplexity work harder, not smarter.

Stop subscribing to more LLMs and meet the product managers who ship 3x faster because they have AI product operations nailed!

Your AI transformation is fake.

Most product managers experimenting with ChatGPT, Gemini, or Perplexity aren’t yet seeing productivity gains. Instead, they are drowning in extra coordination, documentation, and rework.

I know because I’ve begun to audit these transformations.

That's $90,000 in annual salary spent on manual synthesis, documentation, and analysis. Meanwhile, AI-skilled PMs now command 28%+ higher salaries, not because they use ChatGPT, but because they have built an AI operational system that multiplies their output.

From my interviews with Chief Product Officers across Asia, the Middle East, the USA, the UK, and France, and watching 47 companies burn $2.3M on AI tools while their PMs still work weekends, a pattern emerges:

There are PMs who build AI into product operations, and there are those who still copy prompts into LLMs that they mistake for AI agents.

It’s not about subscribing to more LLMs. It’s a set of foundations that exposes the ‘Week 13 collapse pattern’ that the LLM vendors hide from you.

Here's how the higher-salaried PMs are doing it.

Why Product Managers fail to integrate AI Agents into operations

While you read this, your junior PM just leapfrogged you, automating the entire PRD process; your CPO will be asking why you're behind, and most of your PMs are taking LLM outputs at face value.

Most product managers treat LLMs the way they would treat interns: they hand over sporadic tasks without a strategy or any process integration.

“PMs are currently either attending a $2,500 AI bootcamp on Maven or leaping straight into ChatGPT Plus, Claude Pro, and Perplexity subscriptions.”

Yet they’re still drowning in manual work and retreating to spreadsheets because nobody thought to step back and ask, ‘Is subscribing to more LLMs accelerating product operations?’

Here's why this happens. They treat LLMs as if they were AI Agents, then watch YouTube tutorials on AI Agents and fire off single-prompt questions. The result is mediocrity at best.

To demonstrate their use of AI Agents, company leaders either establish a centralised AI division comprising data scientists or machine learning engineers, or assign the PM lead to designate "AI Agent champions" to run experiments, expecting to revolutionise operations while managing a full product backlog.

The failure pattern is predictable and triggered by LLM sprawl:

Weeks 1-4: The Honeymoon Period - PMs discover ChatGPT, Perplexity, Claude, or Gemini and write user stories. Everyone's excited. Productivity seems to spike.

Weeks 5-12: The Sprawl - Different PMs adopt different LLMs. Tool stitching and integration begin; Claude writes PRDs in Squad A, while GPT-4 handles customer feedback in Squad B. Silos emerge without standards.

Weeks 13-20: The Retreat - Let’s put it this way. ‘Will you be able to answer why 87% of your AI Agents fail at week 13?’ (Hint: It won’t be the prompts letting you down.)

Meanwhile, your competitor has automated 60% of their product operations because they didn’t start with the LLM tools; they began with a professional intelligence system approach.

The real failure, though, is that your post-mortem review won’t reveal whether you have created an AI-powered intelligence, because there wasn’t a broad transformation agenda from the business.

“Everyone believes that copy-paste prompts have been a success here, while overlooking the need to streamline workflows related to time management, knowledge discovery, consumption, and creation, allowing teammates to focus on their core expertise.”

Research from over 50 PM interviews reveals the truth: successful AI adoption requires three foundational elements working in concert. Miss one, and you're just playing with expensive LLM toys.

The AI-Agent Powered Product Operations Foundation

Recent PM conversations reveal they struggle with uncertainty when adapting AI to product operations. As Mind the Product notes, highly effective product operations succeed only when they act as strategic partners and treat processes as products.

In the case of AI Agents, we’re dealing with professional intelligence as a strategic partner and process, not a technology that makes things go faster.

But what does this look like for you?

Let’s consider a metaphor for a minute. In the Star Trek: TNG episode “Elementary, Dear Data”, the holodeck only works because it holds the complete Holmes library (knowledge). It springs to life from Geordi’s request (meta-prompt) to “create a villain capable of defeating Data.” It removes the manual work from Geordi (capacity) by managing every streetlamp, raindrop, and background conversation, freeing the crew to focus on solving the case.

This is the essence of AI-powered product operations, which give the product workforce the bandwidth for creativity.

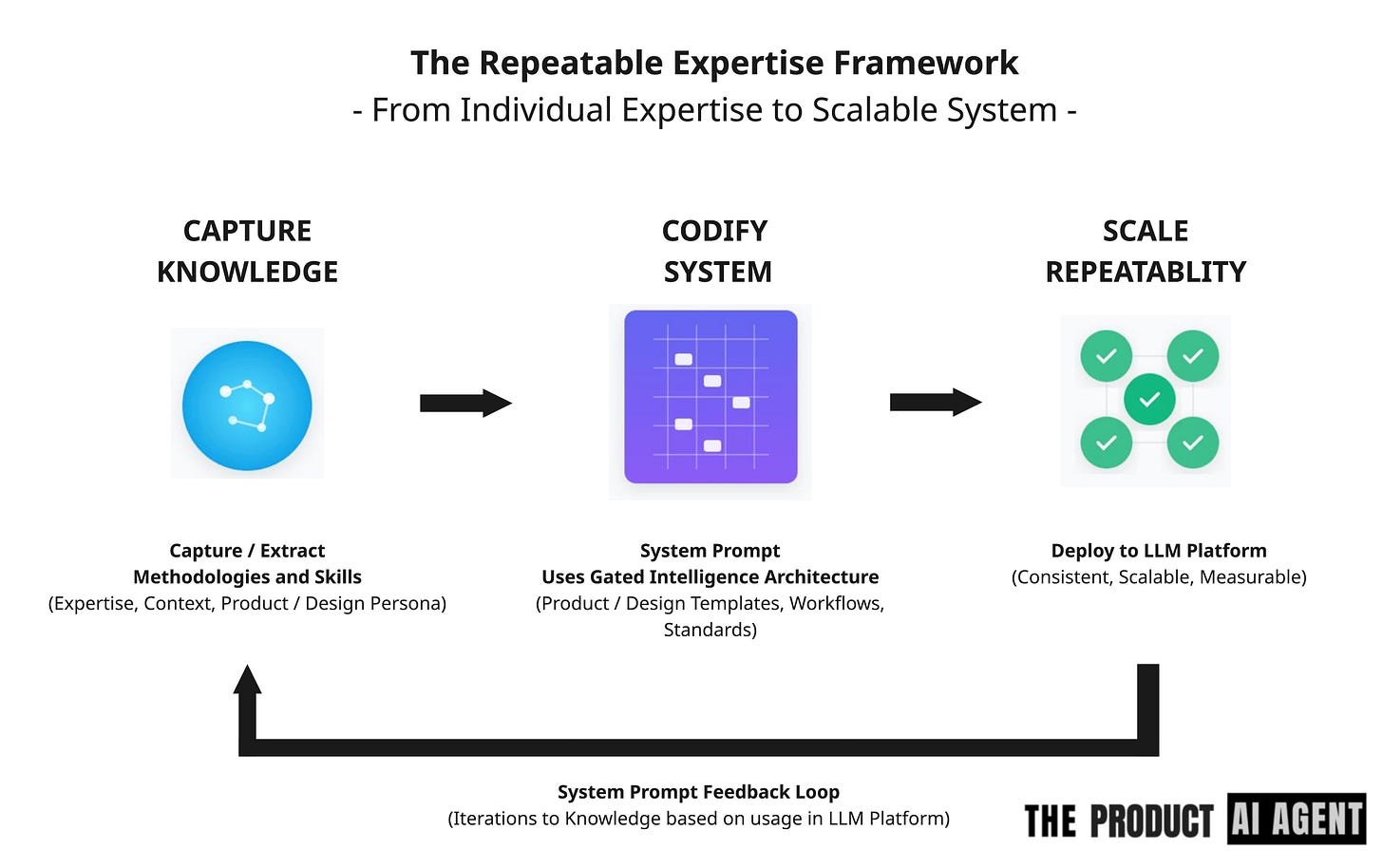

Capture Knowledge Foundation

Successful PMs build structured best-practice repositories to feed their AI systems. The AI-READI Software Development Best Practices repository on GitHub demonstrates how versioned libraries can serve as a persistent source of truth, and product managers who model their playbooks in this way will be the most effective.

Codify System Foundation

When you embed expert knowledge into structured prompts that define roles, behaviours, and rules, you have the start of professional intelligence in operations. Like Figma’s design systems, these foundations ensure AI outputs remain consistent, brand-aligned, and contextually relevant.
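To make this concrete, here is a minimal sketch of what a codified prompt could look like in Python. It is an illustration under assumptions, not the method used by any company named in this article: the `PromptSpec` class, its field names, and the example role, behaviours, and rules are all hypothetical placeholders that you would swap for your own playbook.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class PromptSpec:
    """Hypothetical container for one piece of codified PM expertise."""
    role: str
    behaviours: List[str] = field(default_factory=list)
    rules: List[str] = field(default_factory=list)

    def render(self) -> str:
        """Render the spec as a plain-text system prompt."""
        lines = [f"Role: {self.role}", "", "Behaviours:"]
        lines += [f"- {item}" for item in self.behaviours]
        lines += ["", "Rules:"]
        lines += [f"- {item}" for item in self.rules]
        return "\n".join(lines)


# Illustrative values only; replace them with your team's own playbook.
feedback_synthesiser = PromptSpec(
    role="Senior product manager synthesising customer feedback",
    behaviours=[
        "Group feedback into themes before summarising",
        "Quote customers verbatim when citing evidence",
    ],
    rules=[
        "Never invent feedback that is not in the input",
        "Flag any theme supported by fewer than three customers",
    ],
)

system_prompt = feedback_synthesiser.render()
print(system_prompt)
```

Keeping the role, behaviours, and rules as data rather than as free text means the spec can live in a versioned repository and be reviewed like any other team asset, whichever LLM eventually receives the rendered prompt.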

Repeatable Capacity Foundation

Let AI agents handle repetitive work—feedback synthesis, competitive scans, reporting, and routine documentation. This doesn’t replace you as a PM; it frees you to focus on strategy, creativity, and leadership. By removing 60% of the manual work, you gain the bandwidth to respond to unexpected challenges without burning out.

The ROI of AI Product Operations: Stripe and Figma

Stripe, the payments provider, avoided the trap of treating AI as a one-off tool by embedding it systematically across operations. Their success comes from building all three foundations of AI-powered product operations:

ROI: Stripe reports 57% more recovered recurring payments, higher authorisation rates, and reduced losses from fraud. These are direct financial outcomes that translate into measurable revenue gains.

Figma, the UX/UI prototype SaaS, advanced its approach by building “AI pairs” for every PM, also structured across the three foundations:

ROI: Figma now helps customers deliver prototypes faster, synthesises 3× more customer interviews into insights, and helps achieve consistent, brand-aligned delivery.

The lesson here is that LLMs are not the starting point. The starting point is an operating system that compounds returns once it is built across all three foundations.

I reveal their methods in the playbook for AI Product Operations, along with the six critical prompts that drive their ROI. It's coming in September!

Build your Operating System, not just AI Agents

While 65% of product teams are using LLMs to reduce their time-to-insight by 70%, fewer than 20% have progressed beyond experimental AI Agent usage to a systematic transformation. McKinsey's research confirms this dysfunction.

“The successful 20% don't have better AI Agents, they have better professional intelligence operations.”

Your AI challenge isn’t about finding the perfect AI Agent. It’s about building the operating system underneath.

The product managers pulling ahead aren’t chasing shiny LLM features; they’re capturing knowledge, codifying expertise, and building repeatable capacity with a professional intelligence system so everyone has leverage from day one.

Day 1: Begin professional intelligence experiments today

If you’re starting out integrating LLMs into product operations, focus on one high-impact process that drains your time. Customer feedback synthesis is a common culprit: it’s constant, siloed, and critical to the direction of product strategy.

Appoint a process owner and ask them to craft a system prompt tailored to your process drain, then run it against historical inputs.

Within three weeks, you’ll have a clear delta between manual and AI-assisted work, which becomes your first business case for scaling.
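One simple way to express that delta is as average time saved per run. The sketch below assumes you log how many minutes each synthesis run takes, both manually and with the system prompt. The `time_delta` function is a hypothetical helper, and the numbers are made-up placeholders rather than results from any real team.

```python
from statistics import mean
from typing import Dict, List


def time_delta(manual_minutes: List[float],
               assisted_minutes: List[float]) -> Dict[str, float]:
    """Compare average time per run for manual and AI-assisted work."""
    manual_avg = mean(manual_minutes)
    assisted_avg = mean(assisted_minutes)
    saved = manual_avg - assisted_avg
    return {
        "manual_avg": manual_avg,
        "assisted_avg": assisted_avg,
        "minutes_saved": saved,
        "percent_saved": 100 * saved / manual_avg,
    }


# Placeholder timings in minutes per feedback batch (not real data).
manual_runs = [95, 110, 80, 120]
# Assisted timings should include the time spent reviewing the LLM output.
assisted_runs = [30, 42, 25, 38]

result = time_delta(manual_runs, assisted_runs)
print(f"Manual average:   {result['manual_avg']:.1f} min")
print(f"Assisted average: {result['assisted_avg']:.1f} min")
print(f"Time saved:       {result['minutes_saved']:.1f} min "
      f"({result['percent_saved']:.0f}%)")
```

Time is only half of the business case. It is worth also having the process owner score the quality of each output against the historical result, so that the saving is not bought with rework.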

The product managers building foundational AI operations today are already shipping their third AI-accelerated process, while others are still debating LLM selection.

By systematising intelligence, you create a new strategic moat for yourself. Those who don’t will burn cycles reinventing the wheel.

So, do you want to manually stitch workflows or run on an operating system designed to scale professional intelligence?

Stay tuned for my newsletter next week, which will cover AI product operations.

Download The AI Product Operations Playbook

A playbook to help you identify how LLMs can change your product operations and your own playbooks for building products.

The AI Product Operations Playbook not only reveals the six prompts (which I couldn't share here) but also incorporates lessons learnt from building Templonix, an agentic AI enterprise solution.

It includes a blueprint for delivering LLMs across processes and people, creating a scalable methodology within your company.