This 2-day design workshop prevented a million-dollar AI project failure.

Why smart product managers ask their design teams to start with agent experience—not engineering—if they want AI to deliver ROI for executives.

A few weeks ago, a product director at a B2B SaaS company reached out.

Their team had just shipped what they thought was an AI agent.

It looked impressive until the CFO asked one question:

“What’s the ROI?”

What they’d built wasn’t an agent.

It was a chatbot stitched to a spreadsheet: a stateless, prompt-driven LLM call with no reasoning layer around it, disconnected from real business value.

It couldn’t remember.

It couldn’t reason.

It couldn’t act across tasks.

It looked smart. But it couldn’t deliver outcomes.

The problem wasn’t engineering. It was the missing system design.

They skipped the one step that matters most: designing how the agent reasons and decides, and where humans oversee it.

Burned budget. Lost trust. No answers.

Sound familiar?

Tuck in to learn how a 2-day workshop kept this project from spiralling into a multi-million-dollar failure.

🧨 The real reason AI projects fail

Most AI failures aren’t caused by bad models or poor execution. They fail because teams confuse shipping a prototype with designing a production system around an LLM.

It’s easy to get excited after wiring up an LLM, a chat interface, and a prompt chain.

It feels like progress.

It demos well.

It doesn’t scale.

More importantly, it isn’t an agent, and it won’t deliver what the business needs.

Here’s the uncomfortable truth about creating AI agents:

An agent isn’t a chatbot.

It’s not a clever prompt in a low-code/no-code tool.

And it’s not a wrapper around an LLM (e.g., the models behind ChatGPT or Claude) with a nice UI.

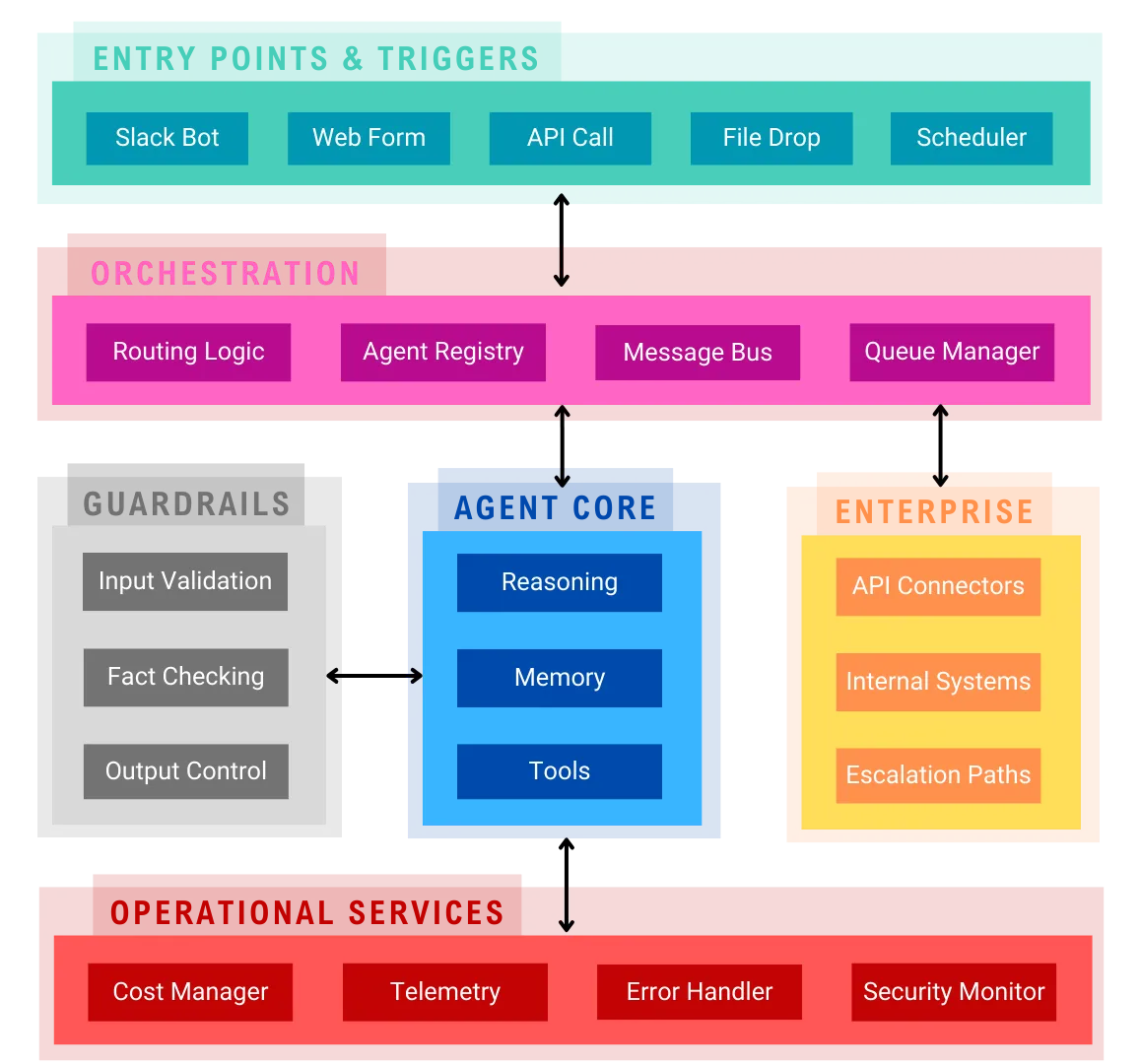

A real AI agent is a production-grade system designed to perform work autonomously, safely, and repeatedly.

It must:

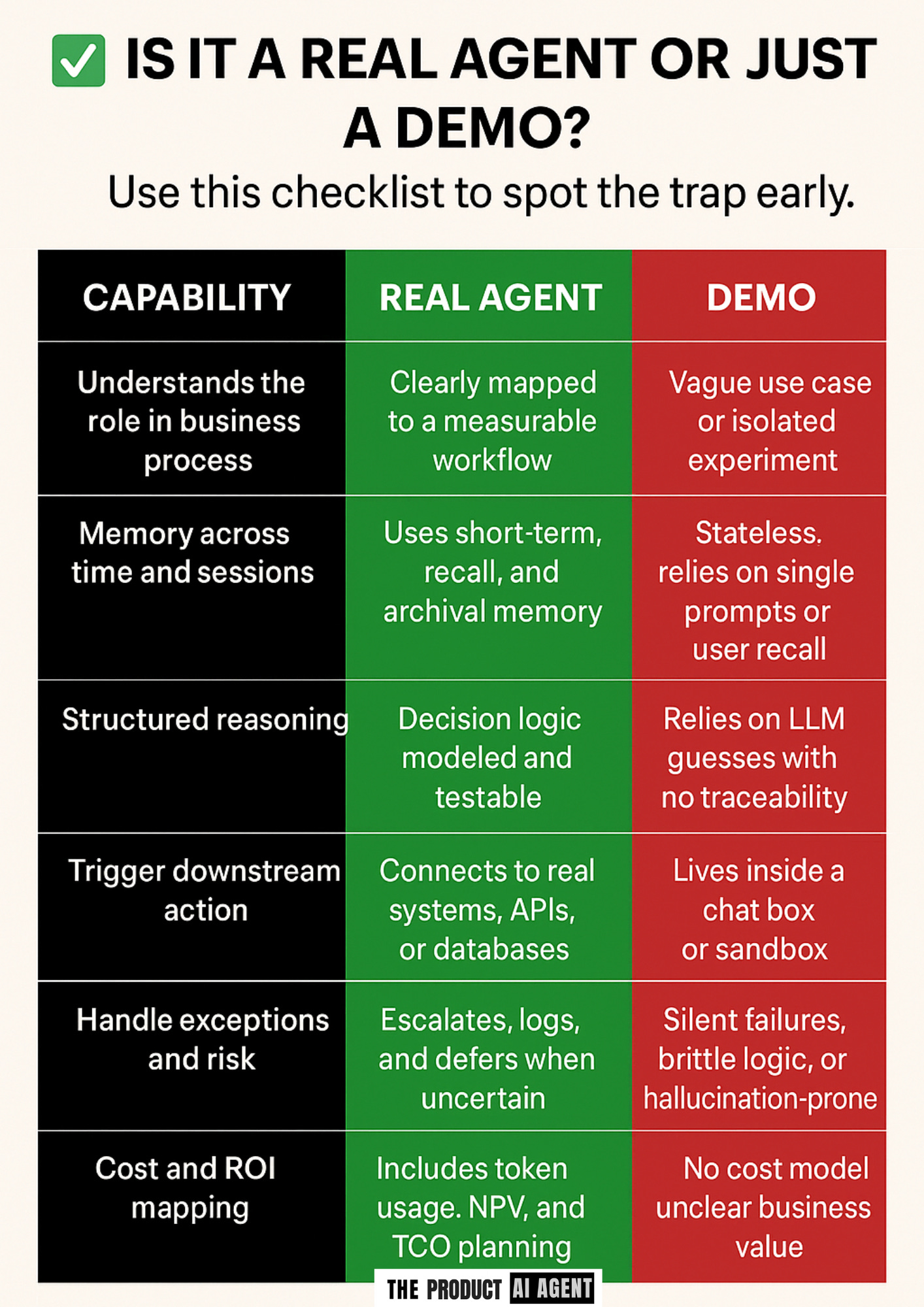

Understand its role in a business process.

Access memory across time and context.

Make decisions using structured logic.

Trigger downstream actions with traceability.

Escalate when confidence is low or risk is high.

Guess where the LLM sits?

That’s right: it isn’t the centre of the agent. It’s one component, connected through an API.
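To make the list above concrete, here is a minimal Python sketch of that shape, assuming a simplified design: every class and function name (`LLMConnector`, `Decision`, `Agent`, `toy_decide`, `StubLLM`) is hypothetical, memory is a plain in-process list, and risk is reduced to "low" or "high". It shows the LLM as a pluggable connector, a role, memory that accumulates across tasks, a stored rationale, a trace ID per action, and escalation when confidence is low or risk is high. It is an illustration, not a production design.

```python
import uuid
from dataclasses import dataclass, field
from typing import Callable, Protocol


class LLMConnector(Protocol):
    """Hypothetical interface: the LLM is reached through a connector."""

    def complete(self, prompt: str) -> str: ...


@dataclass
class Decision:
    action: str        # what the agent chose to do
    confidence: float  # 0.0-1.0, produced by the decision logic
    risk: str          # simplified to "low" or "high"
    rationale: str     # stored so the decision can be explained later


@dataclass
class Agent:
    role: str  # the agent's role in the business process
    llm: LLMConnector
    decide: Callable[[str, list, LLMConnector], Decision]  # structured decision logic
    min_confidence: float = 0.8
    # A plain list keeps the sketch short; a real agent needs a durable store
    memory: list = field(default_factory=list)

    def handle(self, task: str) -> dict:
        decision = self.decide(task, self.memory, self.llm)
        trace_id = str(uuid.uuid4())  # lets downstream actions be traced back
        if decision.confidence < self.min_confidence or decision.risk == "high":
            outcome = "escalated_to_human"
        else:
            outcome = f"executed:{decision.action}"
        record = {
            "trace_id": trace_id,
            "role": self.role,
            "task": task,
            "action": decision.action,
            "rationale": decision.rationale,
            "outcome": outcome,
        }
        self.memory.append(record)  # remembered for the next task
        return record


# --- Usage with hypothetical stand-ins ---
class StubLLM:
    def complete(self, prompt: str) -> str:
        return f"stub reply to: {prompt}"


def toy_decide(task: str, memory: list, llm: LLMConnector) -> Decision:
    note = llm.complete(f"Summarise the request: {task}")
    if "refund" in task:
        return Decision("issue_refund", 0.6, "high", note)
    return Decision("send_status_update", 0.95, "low", note)


agent = Agent(role="order-support", llm=StubLLM(), decide=toy_decide)
r1 = agent.handle("customer asks for order status")
r2 = agent.handle("customer requests a refund")
print(r1["outcome"])  # executed:send_status_update
print(r2["outcome"])  # escalated_to_human
```

The design choice worth noticing is that `decide` and `llm` are injected: the decision logic and the model can be swapped independently, which is the point of not putting the LLM at the centre.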

If your agent can’t remember what it did yesterday, can’t explain why it made a decision, and can’t complete a task without a human watching…

It’s not an agent.

It’s a demo using an LLM.

✅ Is it an agent or just a demo?

Use this checklist to find out before it's too late:

If you're checking more boxes on the right than the left, you're not building a system…

…you're burning budget.

🎯 UX isn’t dying, it’s becoming infrastructure

There’s a lot of noise about AI replacing designers. And yes, UI is shrinking.

Interfaces are dissolving into chat, voice, and automation.

But design isn’t dying. It’s moving deeper into the system.

UX is evolving into AX: Agent Experience.

In the world of AI agents, the job isn’t to design screens. It’s to design how systems reason, act, and improve over time.

Agent Experience means:

Crafting system and user prompts that make the agent’s behaviour clear and predictable

Mapping memory architecture across sessions, tasks, and history

Structuring decision logic with fallback paths and escalation triggers

Designing trust, not just touchpoints

Working with product, data, and ops from day one

This is infrastructure-level design—shaping cognition, not clicks.
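One way to picture "decision logic with fallback paths and escalation triggers" is as an ordered chain of steps with explicit hand-off rules. The Python sketch below is a hypothetical illustration, not the article's method: the step functions (`knowledge_base`, `llm_draft`), the `legal_topic` trigger, and the 0.7 confidence threshold are all assumptions chosen for the example. Each step either answers with enough confidence or falls through to the next; any trigger that fires sends the request to a human.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Result:
    answer: str
    confidence: float  # 0.0-1.0
    source: str        # which path produced it


# A step returns a Result, or None when it cannot handle the request.
Step = Callable[[str], Optional[Result]]


@dataclass
class EscalationTrigger:
    name: str
    fires: Callable[[str, Optional[Result]], bool]


def run_with_fallbacks(
    request: str,
    steps: List[Step],
    triggers: List[EscalationTrigger],
    min_confidence: float = 0.7,
) -> dict:
    for step in steps:
        result = step(request)
        # Escalation triggers are checked on every attempt, before any answer is used
        fired = [t.name for t in triggers if t.fires(request, result)]
        if fired:
            return {"status": "escalated", "reasons": fired}
        if result is not None and result.confidence >= min_confidence:
            return {"status": "answered", "answer": result.answer, "source": result.source}
        # Otherwise fall back to the next step
    return {"status": "escalated", "reasons": ["no_confident_path"]}


# --- Hypothetical steps and trigger for illustration ---
def knowledge_base(req: str) -> Optional[Result]:
    if "policy" in req.lower():
        return Result("Answer from the policy knowledge base", 0.9, "knowledge_base")
    return None


def llm_draft(req: str) -> Optional[Result]:
    return Result("Drafted answer", 0.5, "llm")


triggers = [EscalationTrigger("legal_topic", lambda req, res: "lawsuit" in req.lower())]
steps = [knowledge_base, llm_draft]

print(run_with_fallbacks("What is the travel policy?", steps, triggers))
print(run_with_fallbacks("Summarise this contract", steps, triggers))
print(run_with_fallbacks("Customer threatens a lawsuit over the policy", steps, triggers))
```

The first request is answered by the knowledge base, the second falls through both steps without a confident answer and escalates, and the third escalates on the legal trigger even though an answer was available. Writing these rules down before building is the kind of behaviour design AX is about.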

“The interface is disappearing. What replaces it is agent behaviour, and that’s what needs to be designed.”

Designers who embrace AX will lead in any AI product strategy. Those who stay in UI design tools will be spectators.

The starting point?

Designing the system behind the agent. That’s precisely what the Agent Signal Workshop was built for.

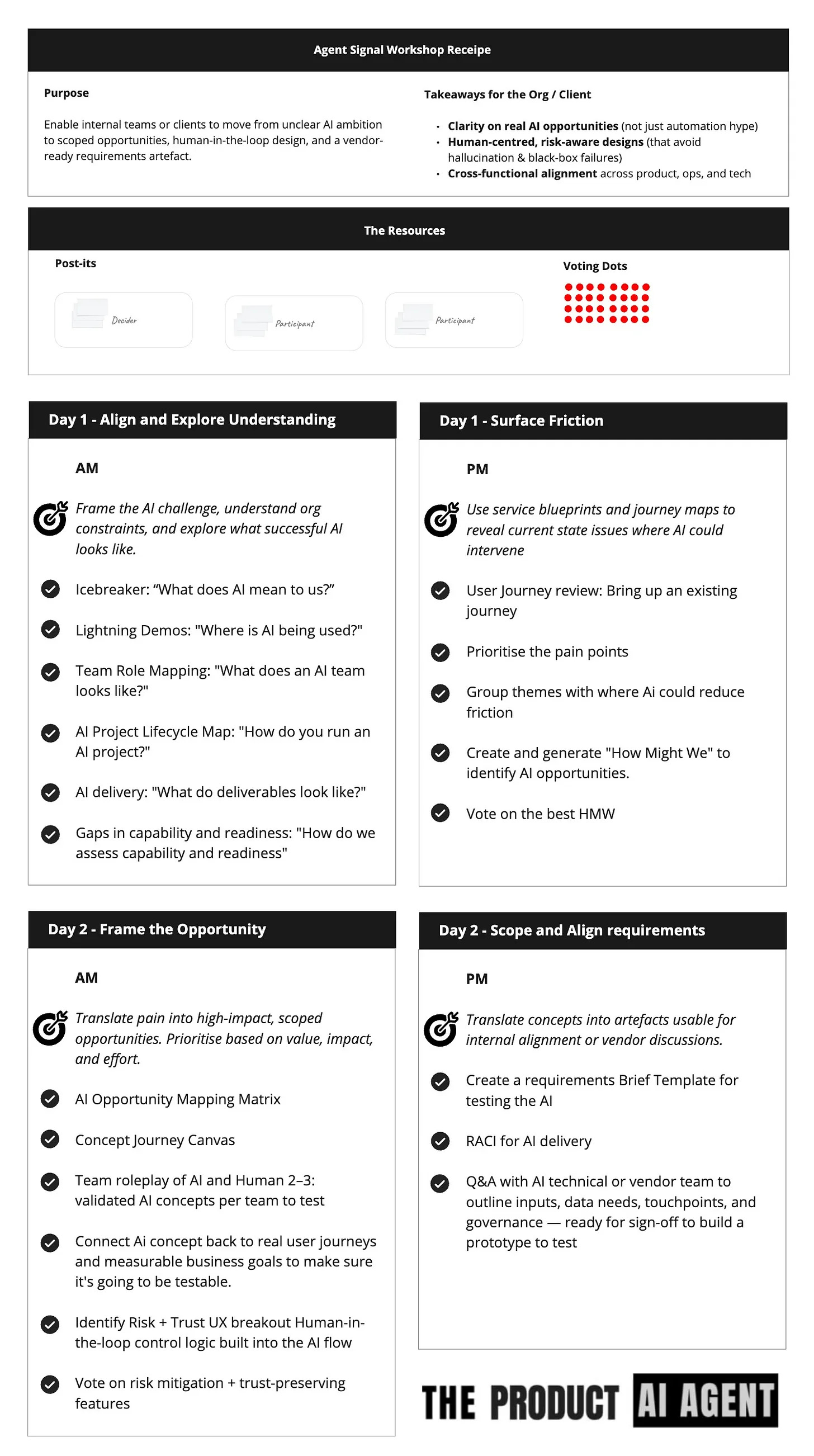

🛠️ What is Agent Signal, and why does it work?

Agent Signal is a 2-day, high-impact sprint built specifically for modern AI development.

It helps teams align on the foundations of an agentic AI system before a single line of code is written.

No fluff.

No endless decks.

Just structured clarity that moves fast.

In two days, teams will:

Define what the agent owns in the business

Map repeatable expertise into usable memory flows

Design governance and escalation rules

Align product, design, data, ops, and legal around one system blueprint

Most AI projects stall because no one agrees on what the agent does, how it makes decisions, or what success even looks like.

Agent Signal front-loads those decisions, so execution is clean, fast, and fundable.

It replaces confusion with clarity, helping teams transition from slide decks to scalable systems without burning months of development time, budget, or trust.
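As a rough illustration of "front-loading decisions", the workshop outcomes can be treated as a checklist that must be complete before build starts. The Python sketch below is hypothetical and is not the Agent Signal template: the `AgentBlueprint` fields and the `open_questions` helper are invented for the example, and only the team list (product, design, data, ops, legal) comes from the outcomes above. It reports which decisions are still open.

```python
from dataclasses import dataclass, field
from typing import List, Set

# Teams named in the workshop outcomes above
REQUIRED_TEAMS = frozenset({"product", "design", "data", "ops", "legal"})


@dataclass
class AgentBlueprint:
    owns: List[str] = field(default_factory=list)              # what the agent is accountable for
    memory_flows: List[str] = field(default_factory=list)      # how expertise becomes memory
    escalation_rules: List[str] = field(default_factory=list)  # governance and hand-offs
    success_metric: str = ""                                   # how success (ROI) is measured
    sign_offs: Set[str] = field(default_factory=set)           # teams that have agreed


def open_questions(bp: AgentBlueprint) -> List[str]:
    """List the decisions still missing before any code is written."""
    gaps = []
    if not bp.owns:
        gaps.append("What does the agent own?")
    if not bp.memory_flows:
        gaps.append("How is expertise captured in memory?")
    if not bp.escalation_rules:
        gaps.append("When does the agent escalate?")
    if not bp.success_metric:
        gaps.append("What does success look like?")
    missing = sorted(REQUIRED_TEAMS - bp.sign_offs)
    if missing:
        gaps.append("Missing sign-off: " + ", ".join(missing))
    return gaps


bp = AgentBlueprint(
    owns=["Triage inbound support tickets"],
    escalation_rules=["Refund requests go to a human"],
    sign_offs={"product", "design"},
)
for question in open_questions(bp):
    print(question)
```

Here the blueprint still lacks memory flows, a success metric, and sign-off from data, legal, and ops, so those three questions are printed. An empty result would mean the team has agreed on what the agent does, how it decides, and what success looks like.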

🎁 Want the workshop kit?

Here’s the Agent Signal recipe, so you can start with your team immediately.

Want the interactive Miro board we use in real client sessions?

Drop a comment or send a chat, and we’ll upload it for you.

💬 Found this helpful?

Share your thoughts, ask questions, or challenge the thinking in the comments.

Thanks for 🔁 Restacking and sharing.