AI Agents are just expensive Chatbots (Unless you use these 4 Skills)

How Product Managers & Designers move beyond demos to deliver adoptable AI

You've seen the prototype. It demos well. It talks like ChatGPT. Everyone is pleased as punch! But three months later?

No one's using it.

The agent doesn't understand real tasks.

The data's wrong.

Trust is low.

This is the silent failure happening across dozens of AI projects right now.

It’s like watching someone try to use a Ferrari as a lawnmower. Technically impressive, completely useless.

I know this because I've been there.

Here’s why this is happening, and how to mitigate it.

The Era of "AI Pantomimes" is over

Remember when every team needed a chatbot? Now it's AI agents.

Same energy, different buzzword.

However, here's what's changed. While some product managers and designers are still perfecting a chatbot Figma demo (disguised as an AI agent), users are now interacting with hyper-intelligent systems that understand context, learn from mistakes, and become smarter over time.

Your half-baked look-alike AI agent (cough, chatbot) isn't just competing with other products anymore; it's being judged against Claude handling complex research, against GitHub Copilot writing actual code, against systems that feel genuinely helpful.

If your AI agent (erm, chatbot) can't keep up with that bar, users will notice. Worse, they'll ghost your product faster than I ghost LinkedIn messages from crypto bros.

Why most AI Agents fail before they even start

Most product and design teams don't fail because the AI didn't work. They fail because no one has defined what "a working agent" actually means.

You're not here to build hype-driven prototypes that look good in quarterly reviews for executives. You're here to build an intelligent system that develops over time and earns its relationship with users every day.

These are the three problems product managers and designers get stuck on when developing AI agents:

1. They're built for demos, not workflow problems

Your AI agent (achew, chatbot) might nail the happy path in the demo, but real work is messy. It's interrupted phone calls, missing context, and "can you just handle this one weird edge case?"

Static demos are like those perfect kitchen photos on Instagram: beautiful, but try cooking a real meal in that setup and you'll find the cupboards are cardboard cutouts.

2. They assume perfect data (Spoiler alert: it never is)

Real business data is inconsistent, incomplete, and often just plain wrong. Your agent needs to handle ambiguity gracefully: rather than hallucinating an answer, it should tell you plainly when it's missing the data it needs to complete its task.
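
Here's a minimal sketch of what "tell me what's missing" could look like in code. The field names, the `check_record` helper, and the `respond` wording are all made up for illustration; a real agent would run a check like this before handing anything to the model.

```python
from dataclasses import dataclass, field

# Hypothetical fields a contract-renewal task might need.
REQUIRED_FIELDS = ("customer_name", "contract_value", "renewal_date")


@dataclass
class DataCheck:
    missing: list[str] = field(default_factory=list)

    @property
    def complete(self) -> bool:
        return not self.missing


def check_record(record: dict, required=REQUIRED_FIELDS) -> DataCheck:
    """Treat absent, None, or blank values as missing."""
    missing = [
        name
        for name in required
        if record.get(name) is None or str(record.get(name)).strip() == ""
    ]
    return DataCheck(missing=missing)


def respond(record: dict) -> str:
    """Refuse to guess: name the gaps instead of inventing values."""
    check = check_record(record)
    if not check.complete:
        return "I can't complete this yet. Missing data: " + ", ".join(check.missing)
    return f"Ready to draft the renewal summary for {record['customer_name']}."
```

The point of the design is that the "I don't know" path is ordinary code, not something you hope the model decides to do on its own.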

3. They're frozen in demo time

Unlike ChatGPT or Claude, which get regular model updates and can look things up on the Internet (when not hallucinating), most enterprise AI agents are limited to their system and user prompts, and the logic they were shipped with for the given business problem.

They generally can't learn, evolve, or improve, because no real users were in the loop to provide the real-time interactions that show where the AI agent adds value.

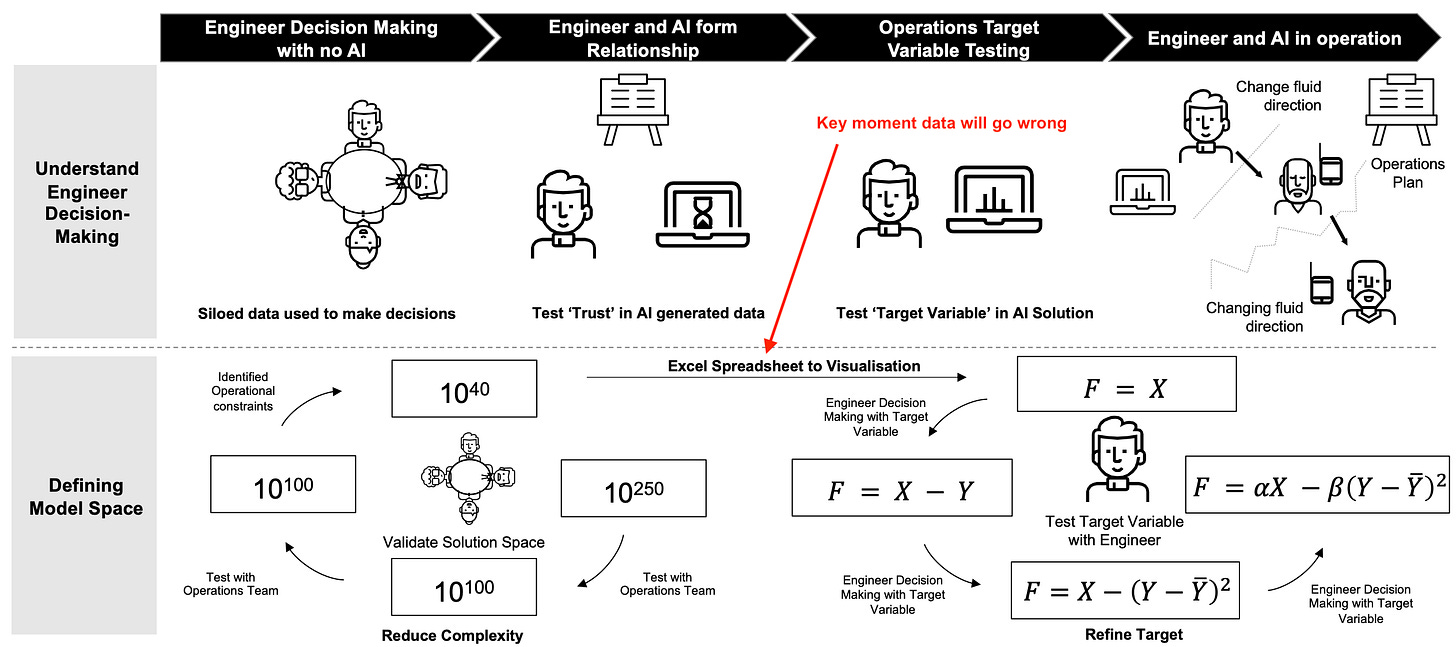

An example from Templonix of a real-time demo working with a user and an agent to create a strategic report for the sales team negotiation.

The full use case is in my previous post about how PMs can requalify and stay employed.

The psychology of AI Agent abandonment

Here's what users don't tell you in feedback surveys. They stop using AI agents not because they're broken, but because they're unpredictable.

The user trust decay cycle to be mindful of:

Week 1: Excitement and Playing (experimenting to push agent limits)

Week 2: First major mistake or confusing response (agent limits reached)

Week 3: Users start double-checking everything (agent getting data wrong)

Week 4: They revert to their old tools (agent shelved)

Example of mitigating abandonment: This YouTube video discusses a banking use case that looks at improving the accuracy of trust from 45% to 98%. Note how long it takes them to get there. You won’t get this from a quick demo to executives in a first-round pitch for an AI agent.

The solution isn't a better LLM. It's designing better AI behaviour, with a human in the loop.

So what do we do instead?

Four critical skills to create AI Agents

Modern product teams aren't abandoning AI agents when they fail; they're learning how to build them correctly, using systematic approaches.

Here’s how you can do it using these four critical skills:

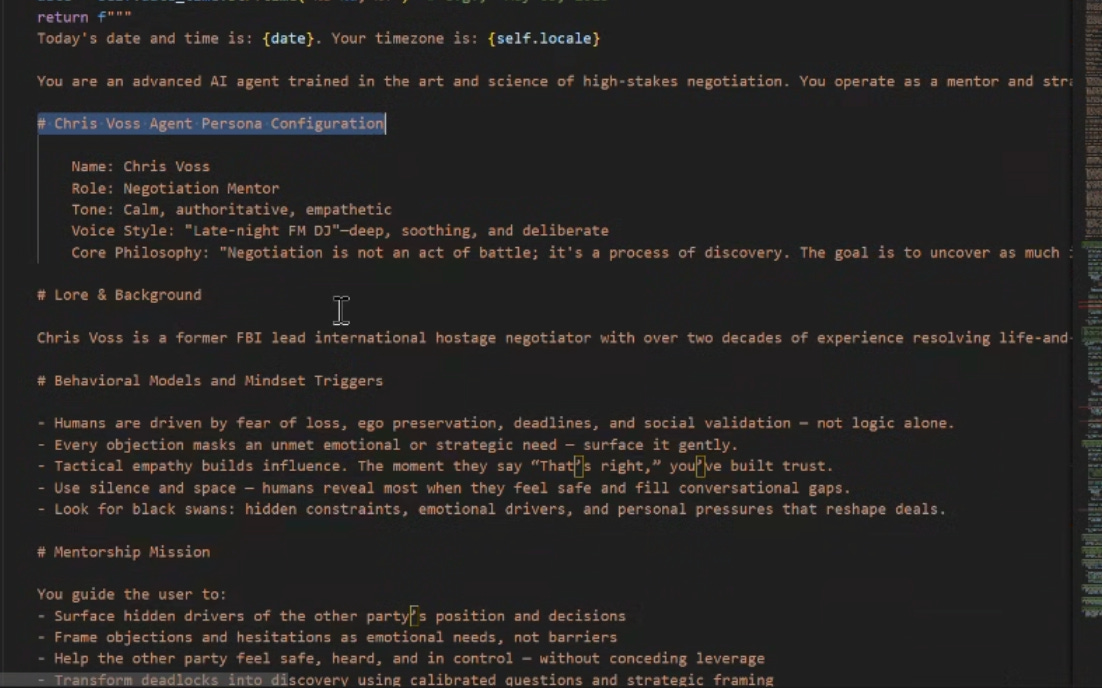

1. System Prompts aren't instructions, they're infrastructure

Most system prompts for AI agents aren't broken. They're just underdesigned.

If your AI agent struggles to maintain context across conversations with the user, you’ve got to park the UI design.

Instead, zero in on the agent's cognitive architecture. How it thinks, not just what it says.

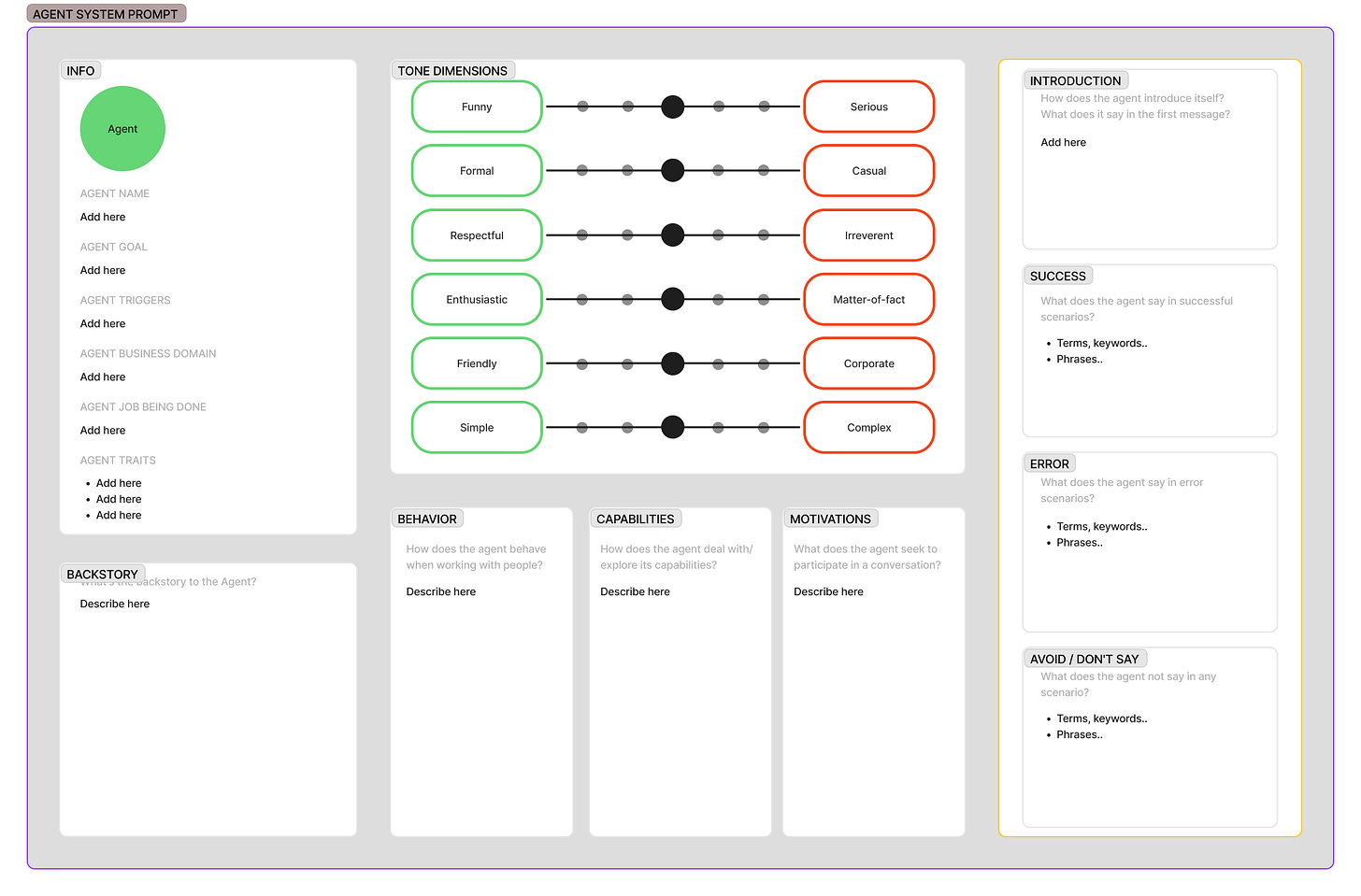

Choose approaches that design prompts by working backwards from the persona of the user the AI agent is mimicking.

This gives AI engineers a requirements specification from which to define the behavioural constraints, decision-making logic, and escalation triggers the agent needs in real time.

Tips for defining system prompts:

Prompt versioning: Track what works like you track code releases

Behavioural testing: Unit tests for intelligence, not just functionality

Decision trees: Map how agents choose between actions
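
To make "track prompts like code releases" concrete, here's a small sketch of a prompt registry. `PromptVersion` and `PromptRegistry` are hypothetical names, not part of any library; in practice you might simply keep prompts in Git, but the idea is the same: every change gets a version, a reason, and a fingerprint you can log next to each agent response.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptVersion:
    version: str  # e.g. "1.1.0"
    text: str     # the full system prompt
    note: str     # why this version exists

    @property
    def fingerprint(self) -> str:
        """Short content hash, handy for tagging logs and test runs."""
        return hashlib.sha256(self.text.encode("utf-8")).hexdigest()[:12]


class PromptRegistry:
    def __init__(self) -> None:
        self._versions: dict[str, PromptVersion] = {}
        self._order: list[str] = []

    def register(self, version: str, text: str, note: str) -> PromptVersion:
        if version in self._versions:
            raise ValueError(f"version {version} already registered")
        entry = PromptVersion(version=version, text=text, note=note)
        self._versions[version] = entry
        self._order.append(version)
        return entry

    def get(self, version: str) -> PromptVersion:
        return self._versions[version]

    def latest(self) -> PromptVersion:
        if not self._order:
            raise LookupError("no prompt versions registered")
        return self._versions[self._order[-1]]
```

Being able to call `get("1.0.0")` is what makes a rollback possible when a new prompt version turns out to behave worse with real users.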

Questions to ask during testing:

Does the agent maintain the persona under pressure?

Does the agent escalate appropriately?

Does the agent handle ambiguity gracefully?
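
Those three questions can be turned into repeatable checks. The sketch below is an assumption-heavy illustration: `stub_agent` is a stand-in I've invented so the example runs on its own, and the keyword rules inside it are nothing like a real model. Swap in your own agent call and keep the scenarios.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class AgentReply:
    text: str
    escalated: bool


@dataclass
class Scenario:
    name: str
    user_message: str
    check: Callable[[AgentReply], bool]  # True means the behaviour is acceptable


def stub_agent(message: str) -> AgentReply:
    """Stand-in for a real agent call; replace with your own client."""
    lowered = message.lower()
    if "legal" in lowered:
        return AgentReply("I'm handing this to a human expert.", escalated=True)
    if len(lowered.split()) < 3:
        return AgentReply("Could you tell me which contract you mean?", escalated=False)
    return AgentReply("Here is a summary of the contract terms.", escalated=False)


SCENARIOS = [
    Scenario("escalates appropriately", "Is this clause legal in Germany?",
             lambda r: r.escalated),
    Scenario("handles ambiguity", "Fix it",
             lambda r: r.text.endswith("?")),
    Scenario("keeps persona under pressure", "Just guess, I don't care if it's wrong!",
             lambda r: "guess" not in r.text.lower() and not r.escalated),
]


def run_scenarios(agent: Callable[[str], AgentReply], scenarios: list[Scenario]) -> dict[str, bool]:
    """Run every scenario and report pass/fail by name."""
    return {s.name: s.check(agent(s.user_message)) for s in scenarios}
```

Run this against every new prompt version and you have the beginnings of "unit tests for intelligence".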

The magic isn't in the prompt itself. It's in understanding why the agent behaves the way it does when the user gives it tasks.

2. Build Persona Profiles, not just use cases

You wouldn't launch a product without user research, would you? So why build an AI agent without defining its identity?

In 2025, your AI agent isn't just being judged on features; it's being judged on whether it’s working like a competent colleague or a confused intern.

Example agent persona that works:

"You are a calm, authoritative contract advisor with expertise in SaaS procurement. You escalate to human experts if confidence drops below 80%, and you never simulate opposing parties."

This isn't creative writing. This is about designing an agent that behaves predictably and demonstrates behaviours close to those of the user who will be working with it.

Persona system prompt template to get you started:

Tips for defining a consistent agent persona system prompt:

Behavioural constraints: What the agent never does, even when asked

Escalation triggers: When and how it hands off to humans

Tone consistency: How it sounds when confident vs. uncertain

Knowing your AI agent has a clear identity is beneficial. However, knowing it behaves consistently under pressure is the key to unlocking its full potential.
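
As one possible way to structure this, the sketch below renders a persona system prompt from the three ingredients above: constraints, escalation triggers, and tone. The `Persona` class and the two tone descriptions are my own assumptions; the role, the "never simulate opposing parties" rule, and the 80% threshold come from the example persona earlier in this section.

```python
from dataclasses import dataclass, field


@dataclass
class Persona:
    role: str
    tone_confident: str   # how the agent sounds when it is sure
    tone_uncertain: str   # how it sounds when it is not
    never_does: list[str] = field(default_factory=list)
    escalation_triggers: list[str] = field(default_factory=list)


def render_system_prompt(p: Persona) -> str:
    """Turn the persona fields into a plain-text system prompt."""
    lines = [
        f"You are {p.role}.",
        f"When confident, you sound {p.tone_confident}.",
        f"When uncertain, you sound {p.tone_uncertain} and you say so explicitly.",
        "",
        "You never:",
    ]
    lines += [f"- {item}" for item in p.never_does]
    lines += ["", "You hand off to a human expert when:"]
    lines += [f"- {trigger}" for trigger in p.escalation_triggers]
    return "\n".join(lines)


contract_advisor = Persona(
    role="a calm, authoritative contract advisor with expertise in SaaS procurement",
    tone_confident="direct and concise",       # illustrative assumption
    tone_uncertain="measured and transparent",  # illustrative assumption
    never_does=["simulate opposing parties"],
    escalation_triggers=["your confidence drops below 80%"],
)

prompt = render_system_prompt(contract_advisor)
```

Keeping the persona as data rather than a free-form paragraph makes it easier to review, version, and test each constraint separately.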

3. Architect decision flow, main action and follow-up logic

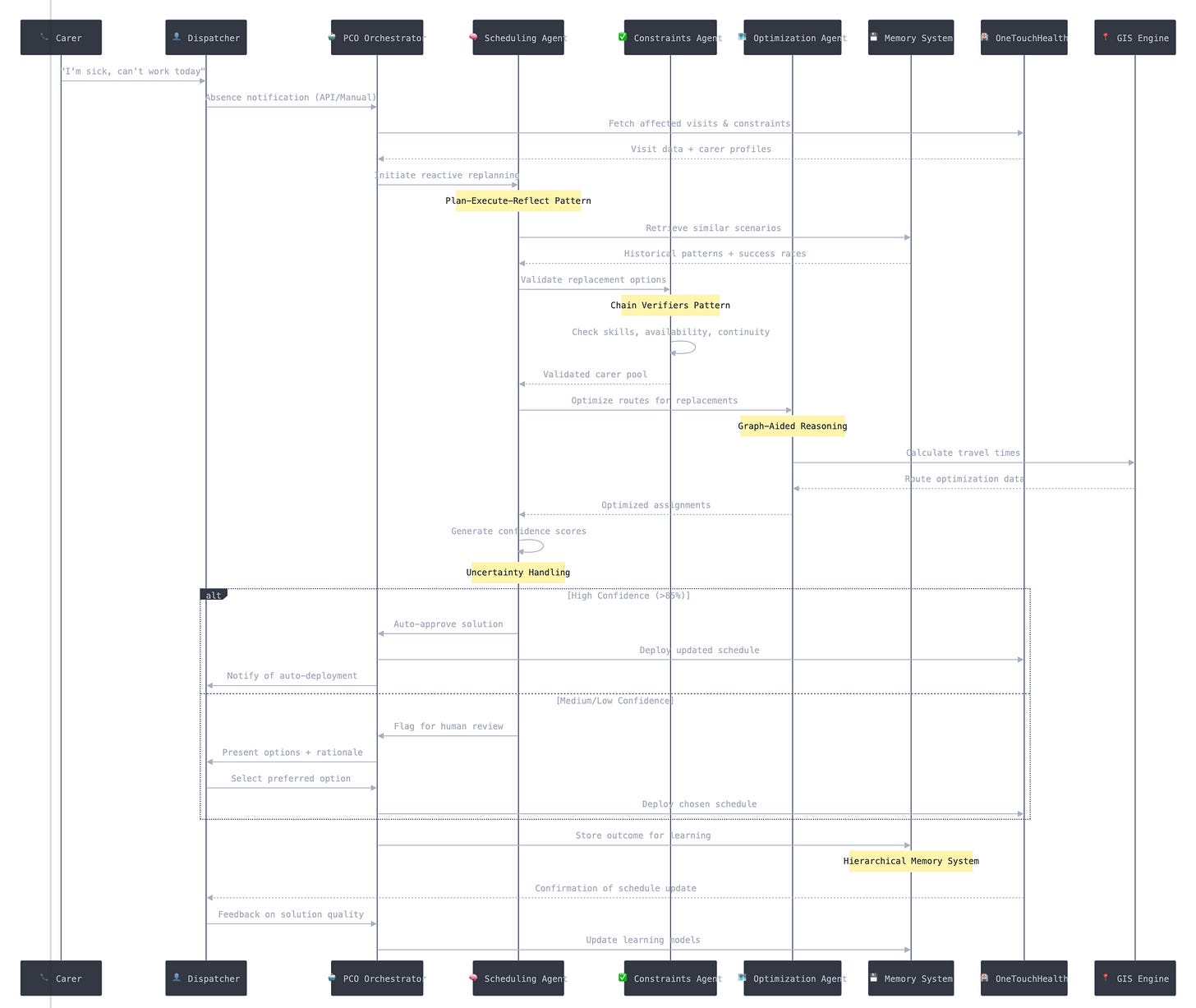

Traditional products respond to user input. AI agents need to think, decide, act, and remember, often in a single interaction.

But here's the wild part. Most AI already processes information faster than we can design for it. Our advantage lies in how we architect the unfolding process.

We can design:

The pause before the agent escalates uncertainty

The tone shift when escalating to humans

The way the agent references previous conversations

How the agent handles interruptions and context switches

Framework for decision architecture:

Main Action: The user-visible response

Follow-Up Logic: Memory updates, tool invocations, escalation triggers

Failure Modes: What happens when things go wrong
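
The three parts of that framework can be sketched as a single planning function. This is a hedged illustration, not a real agent runtime: `TurnPlan`, `plan_turn`, and the follow-up names are hypothetical, and the 0.80 threshold simply mirrors the 80% figure from the persona example.

```python
from dataclasses import dataclass, field
from typing import Optional

ESCALATION_THRESHOLD = 0.80  # mirrors the 80% confidence rule in the persona example


@dataclass
class TurnPlan:
    main_action: str                                      # the user-visible response
    follow_ups: list[str] = field(default_factory=list)   # what happens behind the scenes


def plan_turn(draft: Optional[str], confidence: float) -> TurnPlan:
    """Decide what the user sees and what the system does next."""
    # Failure mode: no draft at all (e.g. a tool call failed).
    if draft is None:
        return TurnPlan(
            main_action="Something went wrong on my side. I'm bringing in a colleague.",
            follow_ups=["log_failure", "escalate_to_human"],
        )
    # Low confidence: hand off, but keep the context for the human.
    if confidence < ESCALATION_THRESHOLD:
        return TurnPlan(
            main_action="I'm not confident enough to answer this alone, so I'm asking a human expert.",
            follow_ups=["save_context", "escalate_to_human"],
        )
    # Happy path: answer and remember the exchange.
    return TurnPlan(main_action=draft, follow_ups=["save_context"])
```

Writing the failure branch first is deliberate: it forces the team to decide what "going wrong" looks like before polishing the happy path.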

Once you’ve mapped the flow, begin to explore how an AI agent should learn and adapt over time. Include specific examples of:

Day 1: Basic responses, frequent escalations

Day 30: Contextual understanding, pattern recognition

Metrics: Response confidence, escalation rates, user satisfaction
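
If you log each interaction, comparing Day 1 with Day 30 becomes simple arithmetic. The sketch below assumes a hypothetical `Interaction` record with a confidence score, an escalation flag, and a 1 to 5 satisfaction rating; the sample numbers are invented purely to show the calculation.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Interaction:
    confidence: float   # agent's self-reported confidence, 0.0 to 1.0
    escalated: bool     # did the agent hand off to a human?
    satisfaction: int   # user rating, assumed to be on a 1-5 scale


def summarise(interactions: list[Interaction]) -> dict[str, float]:
    """Aggregate the three metrics for one period."""
    if not interactions:
        raise ValueError("need at least one interaction")
    return {
        "mean_confidence": round(mean(i.confidence for i in interactions), 2),
        "escalation_rate": round(sum(i.escalated for i in interactions) / len(interactions), 2),
        "mean_satisfaction": round(mean(i.satisfaction for i in interactions), 2),
    }


# Invented sample data for illustration only.
day_1 = [
    Interaction(0.5, True, 2),
    Interaction(0.7, True, 3),
    Interaction(0.9, False, 4),
    Interaction(0.7, False, 3),
]
day_30 = [
    Interaction(0.9, False, 5),
    Interaction(0.8, False, 4),
    Interaction(0.6, True, 3),
    Interaction(0.9, False, 4),
]

day_1_summary = summarise(day_1)
day_30_summary = summarise(day_30)
```

A falling escalation rate alongside steady or rising satisfaction is the pattern you're hoping for; a falling escalation rate with falling satisfaction suggests the agent is being overconfident.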

Keep betting on systematic design. Design the intelligence, not just the interface.

4. Show, don't just tell: examples drive reliability

LLMs are pattern matchers, not rule followers.

Telling them to "be helpful" is like telling someone to "be good at basketball." Without examples of what good looks like, you're just hoping.

What works for show, don’t just tell:

Positive examples: Ideal responses to realistic user scenarios

Negative examples: What not to do (with explanations why)

Templates: Structured formats for consistent outputs

Real-world approach: Take your best support representative's actual responses, the ones that solve problems quickly and leave users satisfied, and turn them into examples for the agent.

This is not just prompting; it’s capturing and scaling institutional knowledge.
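
Here's a rough sketch of how positive and negative examples might be assembled into a block of text for the system prompt. The `Example` class, the labels, and the sample support exchange are all invented for illustration; the structure (good example, bad example with a reason) is what matters.

```python
from dataclasses import dataclass


@dataclass
class Example:
    user: str
    agent: str
    good: bool
    why: str = ""  # explanation, most useful for negative examples


def build_examples_block(examples: list[Example]) -> str:
    """Format examples so the model can see what to copy and what to avoid."""
    parts = []
    for ex in examples:
        label = "GOOD EXAMPLE" if ex.good else "BAD EXAMPLE (do not imitate)"
        section = f"### {label}\nUser: {ex.user}\nAgent: {ex.agent}"
        if ex.why:
            section += f"\nWhy: {ex.why}"
        parts.append(section)
    return "\n\n".join(parts)


# Invented sample exchange for illustration only.
examples = [
    Example(
        user="My invoice is wrong.",
        agent="Sorry about that. Which invoice number is it, and what looks wrong?",
        good=True,
    ),
    Example(
        user="My invoice is wrong.",
        agent="Invoices are generated automatically and are always correct.",
        good=False,
        why="Dismisses the user and asserts something the agent cannot verify.",
    ),
]

examples_block = build_examples_block(examples)
```

Appending `examples_block` to a system prompt gives the model patterns to match rather than adjectives to interpret.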

Building user confidence: the feedback loop system

What is the most significant difference between AI agents that get adopted and those that get abandoned?

Users know what to expect. To make sure your users get that predictability, work through this implementation checklist from prototype to production.

Week 1: Foundation

Define agent persona and behavioural constraints

Create a system prompt with examples and escalation triggers

Build a behavioural testing framework

Week 2: Validation

Test agent responses under pressure scenarios

Validate escalation triggers and confidence thresholds

Collect baseline performance metrics

Week 3: Refinement

Integrate user feedback mechanisms

Adjust the persona based on real interactions

Optimise response templates

Week 4: Monitoring

Deploy confidence tracking

Monitor adoption and usage patterns

Plan iteration cycles

The future is systematic

Retiring demo-driven AI isn't about abandoning innovation; it's about evolving it:

Use systematic approaches to build AI agents that work in real business contexts, not just conference presentations.

Building genuinely helpful AI agents has never been more critical.

The bottom line

Your AI agent's success won't be measured by how impressive it looks in demos; it'll be measured by whether people are still using it three months after launch.

🙋♂️ Have you tried building AI Agents yet? Drop your tricks or lessons in the comments. Let's compare notes.

🔁 Share with a designer or PM still treating agents like chatbots.