Part 4 - 5 ways product leaders can wow their C-suite with the revenue impact of AI agents

How product leaders can avoid these common mistakes when asking for budget investment.

AI agents are no longer speculative—they’re strategic. Yet many product leaders still struggle to translate AI’s potential into the language the C-suite speaks: revenue, operating leverage, and margin expansion.

Too often, agent proposals focus on features or technical capabilities. But executives don’t fund automation. They fund results.

This article gives you five strategic methods to pitch AI agents without falling into ROI theatre.

You'll learn how to:

Quantify revenue outcomes

Forecast costs and break-even timelines

Build trust through transparency

Frame agents as operational assets

These methods will help you land your case precisely when preparing to secure funding for an AI agent project.

Let’s dive in.

What does it take to budget for an AI Agent?

Before any C-suite investment conversation begins, product leaders must align their budget modelling with reality:

“AI agents are not a software feature—they’re full-stack systems that combine infrastructure, human cognition, and operational trust.”

That means cost modelling cannot be done in a silo.

The cost model should be co-developed with an AI programme manager, the person who understands the end-to-end architecture costs of an agent:

Sovereignty costs

Subscription fees

LLM consumption across environments

Compute, vector, and ephemeral storage

Resource design and build

Without understanding all of this, token overrun, latency issues, and runaway inference costs will cripple the project before ROI is ever realised.

From a product ownership perspective, these will be included in the design and build resource cost. The budget ask will account for the human architecture required to make the agent intelligent, trusted, and aligned with user needs and business outcomes.

At a minimum, this includes:

An Information Architect: For cognitive and prompt design. They support the design of the memory schemas, craft deterministic system prompts, define chunking logic for knowledge ingestion, and write fallback/escalation language for when the agent needs help from a human.

A Product Manager: They oversee the end-to-end agent lifecycle, execute use cases, work with SMEs to extract repeatable expertise, and lead the design → build → test → deploy loop.

Product leaders who bring a financial model tied to roles, run costs, and outcome metrics don’t just get approval — they get confidence. From the C-suite. From IT. From the teams who will benefit every day from an agent that actually works.

So, what separates top-performing product leaders from the rest in their ability to shift the conversation from "what the agent can do" to "what the agent will return"?

Let’s find out.

1. Replace ROI theatre with full-funnel revenue

One of the most common missteps in AI agent investment cases is over-indexing on generic productivity gains such as “We’ll save time” or “this will automate tasks”.

They’re not compelling.

They’re vague.

They miss the point.

They don’t resonate in the boardroom.

Executives care about financial velocity—how fast capital turns into cash. Your job is to show how AI agents compress time cycles, expand margins, or grow customer lifetime value.

Where to start:

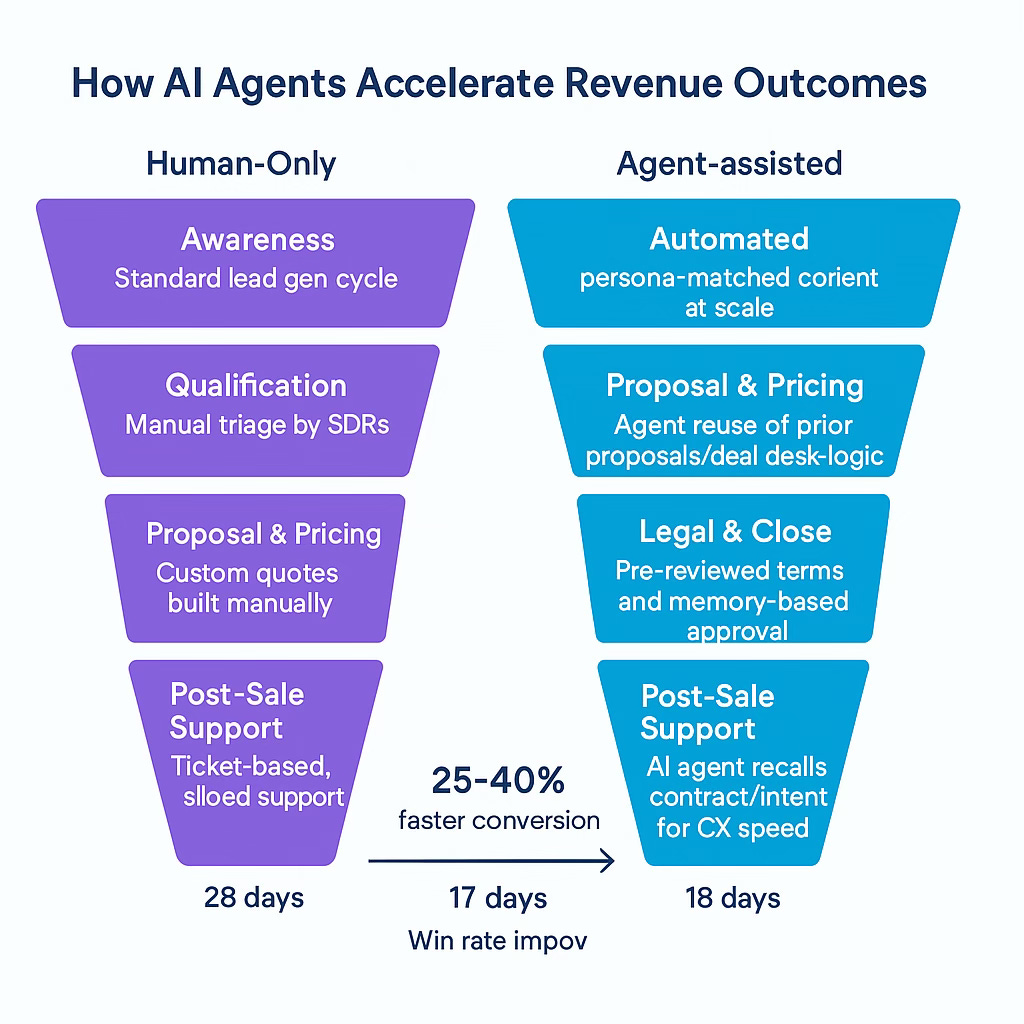

Map the commercial funnel. For example, a SaaS sales manager touches on pre-sale discovery, engagement, post-sale onboarding, and support.

Insert the agent. Identify stages where an agent can inject expertise and speed.

Quantify the lift. Sales enablement agents, for example, reduce pricing delays by retrieving past deal data, surfacing playbooks, or auto-drafting proposals.

Storylines work well with executives because they strip out hypothetical benefits and focus on the operational job being done and the costs associated with margin expansion and velocity.

A ‘Sales Enablement Agent’ needs to be trained on previous deal desk decisions, objections, and pricing playbooks so that it can guide account executives during live calls.

Instead of stalling to “check with Finance,” the agent can retrieve precedent cases, draft counterproposals, or auto-generate terms—all in context.

In our work with go-to-market teams, we’ve seen deal cycle times fall by 25–40%, which has a direct impact on pipeline conversion and revenue predictability.

A funnel graphic with “before and after” metrics (time to close, proposal approval latency, rep ramp-up) can become your strongest revenue argument.

When these kinds of numbers are presented visually, they cut through theoretical ROI and demonstrate how the agent is embedded in the cadence of everyday business.
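As a rough illustration of the “before and after” view described above, the sketch below computes the percentage change for each funnel metric. The metric names and all of the numbers are hypothetical placeholders, not measurements from the engagements mentioned earlier; swap in your own baseline and pilot data.

```python
def percent_change(before, after):
    """Percentage change from the baseline value to the post-agent value."""
    return (after - before) * 100 / before


# HYPOTHETICAL placeholder data: (baseline, with agent)
funnel_metrics = {
    "time_to_close_days": (60, 42),
    "proposal_approval_hours": (48, 12),
    "rep_ramp_up_weeks": (12, 9),
}

for name, (before, after) in funnel_metrics.items():
    change = percent_change(before, after)
    print(f"{name}: {before} -> {after} ({change:+.0f}%)")
```

A table like this is the raw material for the funnel graphic: one row per stage, with the baseline, the pilot result, and the change side by side.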

2. Treat your agent like a business unit asset

AI agents aren’t just features. They operate autonomously, impact workflows, and require oversight.

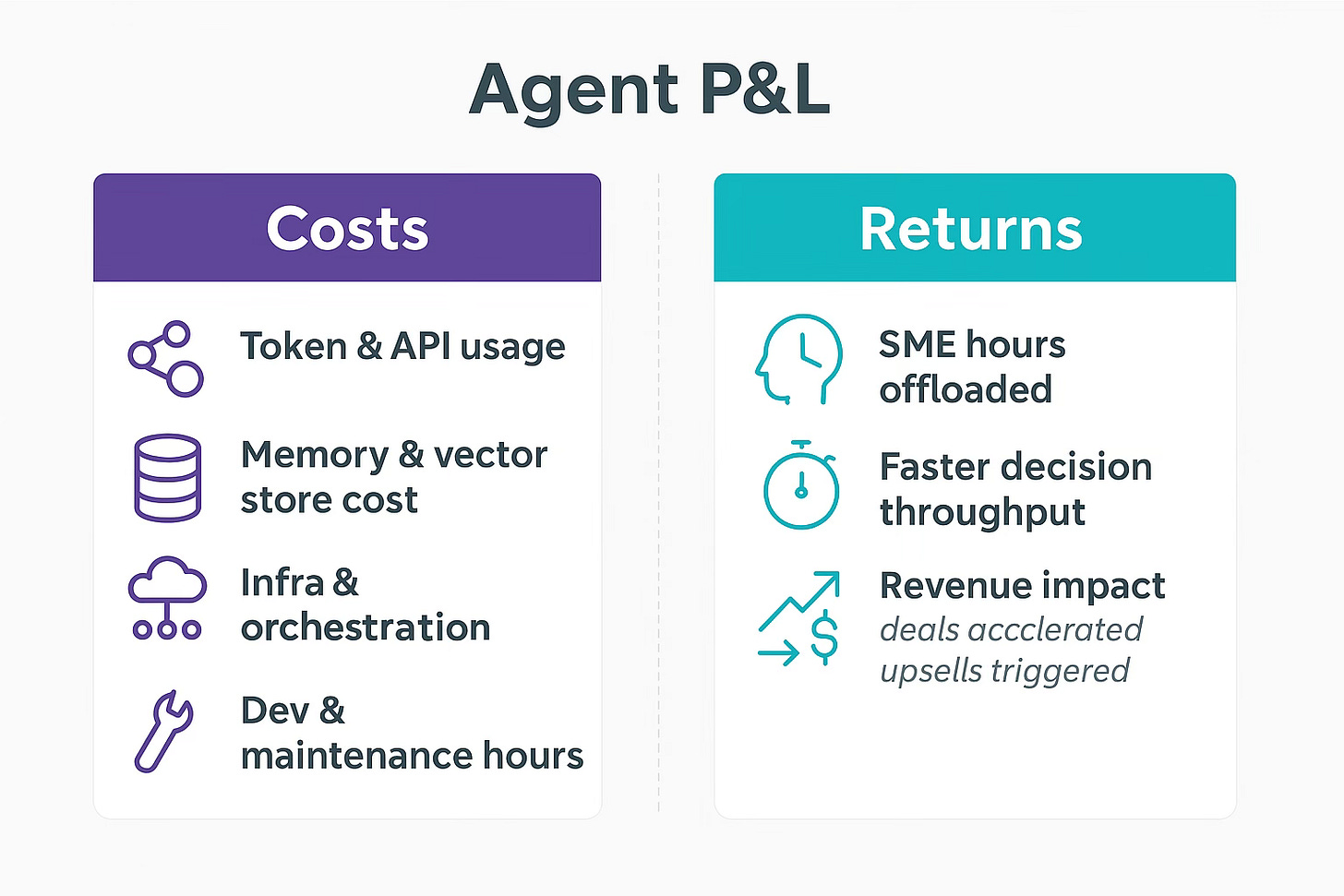

“You’re not building a tool—you’re building a micro-business inside the company with a miniature P&L.”

Cost modelling

AI agents need to be fundable like any other asset with a forecastable return. That means calculating the Total Cost of Ownership (TCO) over a 12–36 month window, covering token usage, API calls, memory storage, model retraining, and engineering time, so you know what it costs to run, adapt, and support the agent.
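One way to make the TCO calculation concrete is a small model that adds a one-off build cost, monthly run costs, and periodic retraining over the chosen window. This is a minimal sketch: the class name, the function name, the cost categories as fields, and every dollar figure are illustrative assumptions, not benchmarks.

```python
from dataclasses import dataclass


@dataclass
class MonthlyRunCosts:
    """Hypothetical monthly cost lines for one agent (placeholder values)."""
    llm_tokens: float        # token usage across environments
    api_calls: float         # third-party API and tool calls
    memory_storage: float    # vector and other memory storage
    engineering_time: float  # ongoing engineering and support

    def total(self) -> float:
        return (self.llm_tokens + self.api_calls
                + self.memory_storage + self.engineering_time)


def total_cost_of_ownership(build_cost, monthly, months,
                            retraining_cost, retrain_every_months):
    """Build cost + run costs over the window + completed retraining cycles."""
    retraining_cycles = months // retrain_every_months
    return (build_cost
            + monthly.total() * months
            + retraining_cycles * retraining_cost)


# ASSUMPTION: all figures below are made up for illustration.
monthly = MonthlyRunCosts(llm_tokens=1_500, api_calls=300,
                          memory_storage=200, engineering_time=2_000)

for window in (12, 24, 36):
    tco = total_cost_of_ownership(build_cost=60_000, monthly=monthly,
                                  months=window, retraining_cost=5_000,
                                  retrain_every_months=6)
    print(f"{window} months: ${tco:,.0f}")
```

Running the same model at 12, 24, and 36 months shows how much of the total is recurring rather than build cost, which is usually the part a budget ask underestimates.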

Outcome modelling

A product manager should be interested in the return side. Quantifying how many hours are offloaded from subject matter experts (SMEs), how many decisions are made faster, and where revenue capacity expands gives you the evidence for how the agent contributes to ARR.

Let’s consider our sales enablement agent vs the sales manager; we answer questions such as:

Are sales deals closing quicker?

Are compliance reviews being performed more consistently?

Can onboarding time be cut in half?

A well-designed agent model shows costs and returns conservatively and on a timeline. It frames the agent as a productive asset with an ROI, not as a software add-on.

3. Show the break-even point, visually

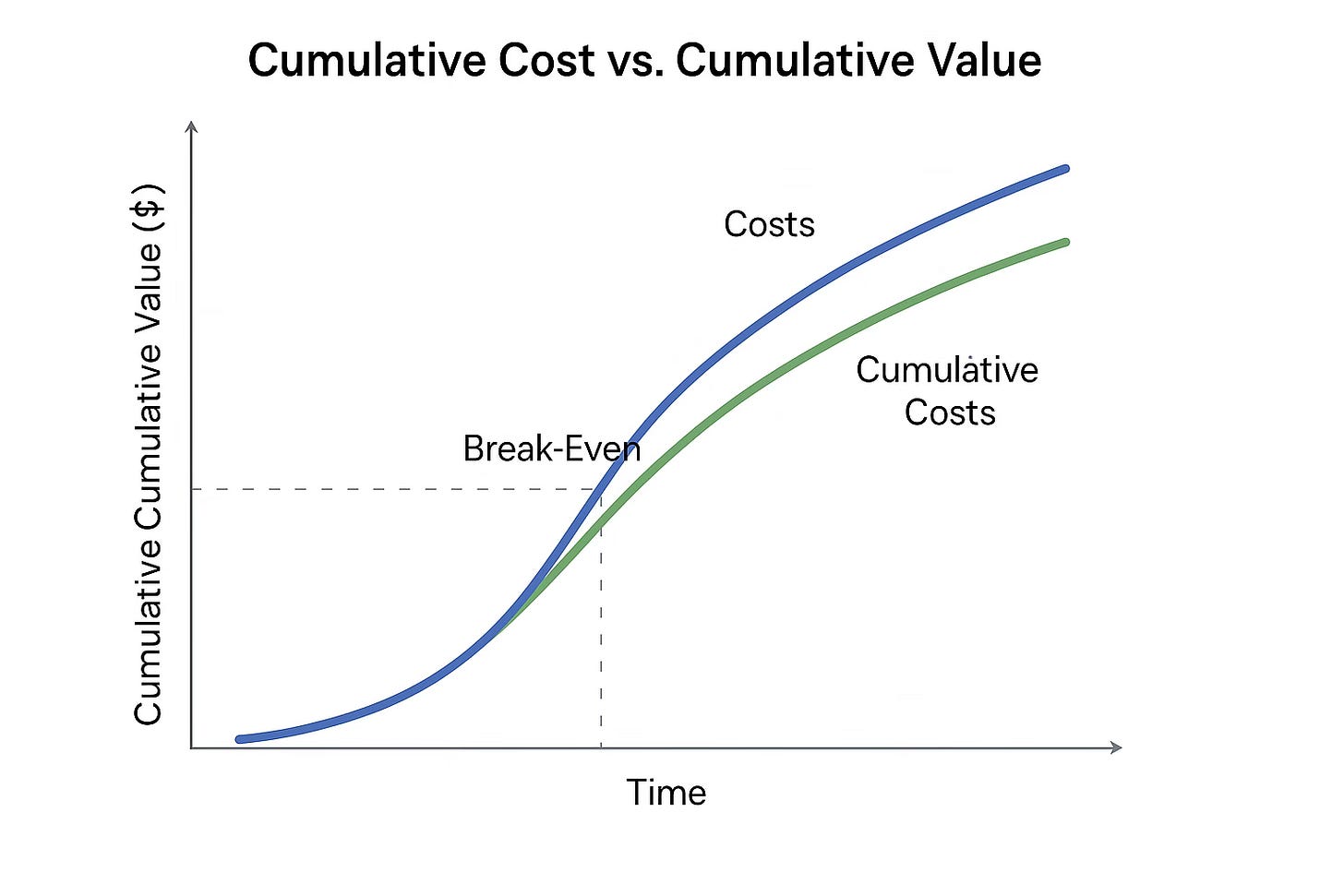

Many AI agent proposals will fail, not because the solution lacks merit, but because the value timeline is unclear.

Executives want timelines. They live in charts and tables most days, so handing them token latency, prompt speed, or embedding quality is unlikely to answer their real question: when do we get our money back?

Translating AI agent investment into financial timelines creates clarity, instead of relying on abstract efficiency metrics.

Net cash flow = what comes back − what goes out

The recommended approach is to model Net Present Value (NPV) over a defined investment horizon (typically 24–36 months) and calculate the Internal Rate of Return (IRR).
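For reference, these two measures are conventionally defined as follows. The symbols are notation introduced here for illustration: CF_t is the net cash flow in period t (negative while you are investing, positive once returns arrive), r is the discount rate per period, and T is the investment horizon.

```latex
\mathrm{NPV}(r) = \sum_{t=0}^{T} \frac{CF_t}{(1 + r)^{t}},
\qquad
\mathrm{IRR} = r^{*} \ \text{such that} \ \mathrm{NPV}(r^{*}) = 0
```

An agent with a positive NPV at the company's hurdle rate, or an IRR above it, clears the same bar as any other funded project.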

This will elevate your position and benchmark the agent against other capex or digital transformation projects.

Let’s consider our sales manager example:

Total investment of $180,000 over two years, covering infrastructure, development, prompt engineering, and retraining cycles.

Time savings from SME task offload are projected at 3,600 hours annually.

Result: Break-even achieved by month 10, with a 3-year IRR of 72%.
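The example above does not state how an hour of SME time is valued or how the spend is phased, so any calculation needs assumptions. The sketch below takes the $180,000 and 3,600 hours from the example, then assumes (hypothetically) that the full investment is committed up front, that an offloaded hour is worth $60, and that the hurdle rate is 10% a year. Under those assumptions break-even lands at month 10; the IRR it prints will not match the 72% quoted above, because the underlying cash-flow schedule is not given.

```python
def npv(rate, cash_flows):
    """Net present value; cash_flows[0] is period 0, rate is per period."""
    return sum(cf / (1 + rate) ** t for t, cf in enumerate(cash_flows))


def irr(cash_flows, low=0.0, high=1.0, tol=1e-9):
    """Per-period IRR by bisection; needs NPV to change sign on [low, high]."""
    if npv(low, cash_flows) * npv(high, cash_flows) > 0:
        raise ValueError("IRR is not bracketed by [low, high]")
    while high - low > tol:
        mid = (low + high) / 2
        if npv(low, cash_flows) * npv(mid, cash_flows) <= 0:
            high = mid   # root lies in [low, mid]
        else:
            low = mid    # root lies in [mid, high]
    return (low + high) / 2


def break_even_month(cash_flows):
    """First month where cumulative undiscounted cash flow is non-negative."""
    cumulative = 0.0
    for month, cf in enumerate(cash_flows):
        cumulative += cf
        if cumulative >= 0:
            return month
    return None


INVESTMENT = 180_000          # from the example; ASSUMED spent at month 0
HOURS_SAVED_PER_YEAR = 3_600  # from the example
HOURLY_VALUE = 60             # ASSUMPTION: value of one offloaded SME hour
ANNUAL_DISCOUNT_RATE = 0.10   # ASSUMPTION: hurdle rate
MONTHS = 36

monthly_benefit = HOURS_SAVED_PER_YEAR / 12 * HOURLY_VALUE
cash_flows = [-INVESTMENT] + [monthly_benefit] * MONTHS

monthly_rate = (1 + ANNUAL_DISCOUNT_RATE) ** (1 / 12) - 1
monthly_irr = irr(cash_flows)
annual_irr = (1 + monthly_irr) ** 12 - 1

print("Break-even month:", break_even_month(cash_flows))
print(f"NPV at {ANNUAL_DISCOUNT_RATE:.0%}: ${npv(monthly_rate, cash_flows):,.0f}")
print(f"Annualised IRR: {annual_irr:.0%}")
```

Changing `HOURLY_VALUE` or phasing the investment across months moves both the break-even point and the IRR, which is why the assumptions belong on the slide next to the chart.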

This structure allows the AI agent to be compared with traditional investment vehicles, not just experimental tools.

4. Account for labour and oversight costs

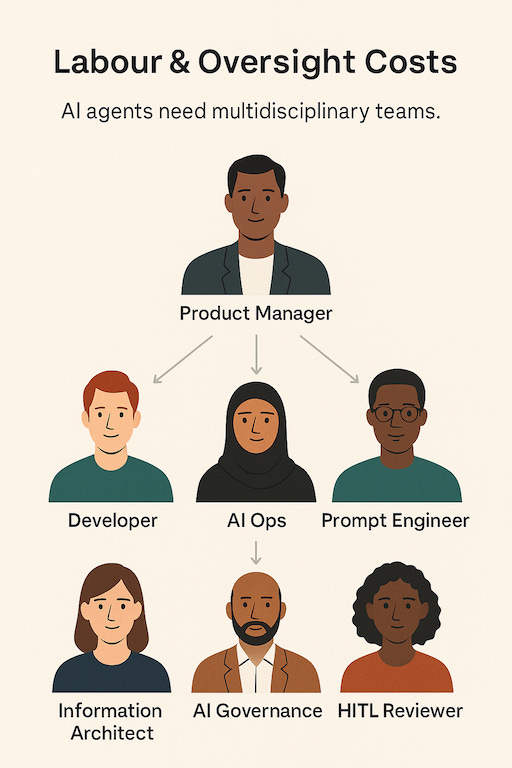

Every agent has a team behind it. If you skip human governance in your budget, your rollout will be fragile.

Behind every reliable, production-ready agent is a multidisciplinary team working across the lifecycle—from design to deployment to daily operations.

The team supporting an AI agent

Each agent must be developed, maintained, and governed by a team with distinct roles:

AI Engineers - handle the build, fine-tuning, and deployment of agent models.

Prompt Engineers - focus on designing robust prompts that anchor reasoning, ensure identity consistency, and minimise hallucinations.

Information Architects - structure the information requirements and iterate the memory systems (core, recall, and archival). They work with the SME to surface the knowledge.

AI Ops teams - ensure uptime, manage drift, track usage, and implement automated escalation paths for failure scenarios.

Human-in-the-Loop (HITL) Quality Assurance Reviewers - step in when ambiguity or edge cases demand expert judgment.

AI Governance Leads - ensure explainability and compliance are not afterthoughts. They audit decisions, handle traceability requests, and define policy boundaries (guardrails) the agent must obey.

As agents increase in complexity, labour requirements scale with them. This human governance layer is not overhead—it’s integral to making the system safe, reliable, and explainable.

Staffing needs must be factored in early and explicitly when forecasting agent budgets. Failing to do so leads to underfunded rollouts and operational fragility.

5. Explainability as an investment filter

The best AI systems won’t win approval if they’re black boxes.

Trust—and accuracy—are the investment gate.

Design for auditability

Even the most accurate system will struggle to secure funding if its decision-making is opaque. Executives need assurance that the agent's users know what it decides, how it arrived at that decision, and what happens when the answer isn’t apparent.

“The goal is not just transparency, but auditability.”

This is where explainability becomes more than a technical nice-to-have. It becomes a financial and regulatory safeguard. Agentic systems must be able to surface their reasoning trails—whether that’s a memory citation, a series of tool calls, or a Tree of Thought (ToT) branch.

Visual aid

Agents designed with explainability at their core give organisations the confidence to deploy them in high-stakes environments. These systems should be able to articulate when they are confident, when they are uncertain, and when human intervention is required.

This includes the ability to visually:

Reference the specific memory or data point used

Surface the reasoning logic behind a decision

Escalate to a human-in-the-loop reviewer when risk thresholds are exceeded.
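The three capabilities above can be sketched as a single audit record that stores what was decided, which sources were cited, the reasoning steps, and whether the decision was escalated. This is an illustrative sketch only: the `AuditRecord` fields, the `record_decision` helper, the 0.75 threshold, and the rule "escalate when confidence is low or no source is cited" are all assumptions, not a prescribed design.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

RISK_THRESHOLD = 0.75  # ASSUMPTION: minimum confidence to act without review


@dataclass
class AuditRecord:
    decision: str
    sources: list      # memory entries or data points the agent cited
    reasoning: list    # ordered reasoning steps or tool calls
    confidence: float  # 0.0 to 1.0, as reported by the agent
    escalated: bool    # True when a human reviewer must step in
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def record_decision(decision, sources, reasoning, confidence,
                    threshold=RISK_THRESHOLD):
    """Build an audit record and flag it for human review when needed."""
    # Escalate if the agent is unsure or cannot point to any source.
    escalated = confidence < threshold or not sources
    return AuditRecord(decision, list(sources), list(reasoning),
                       confidence, escalated)


# Hypothetical example: a pricing recommendation with one cited precedent.
record = record_decision(
    decision="Offer 12% discount on a 2-year term",
    sources=["deal-desk precedent (hypothetical id: D-001)"],
    reasoning=["retrieved similar deals", "checked pricing playbook"],
    confidence=0.62,
)
print("Escalate to reviewer:", record.escalated)
```

Because every decision produces a record whether or not it is escalated, the same data supports both the reviewer queue and later audit or traceability requests.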

“C-suite decision-makers are not looking for perfection; they are looking for defensibility.”

The most investable agents are those that make it easy to trace errors, explain exceptions, and improve with feedback over time.

Next Steps: The budget checklist

To secure funding for your AI agent, speak with executive fluency:

Quantify the Revenue Lift – Time saved is secondary. Show sales acceleration, margin expansion, or CX gains.

Model Full Costs – Include design, prompts, memory, and oversight.

Use NPV/IRR – Show break-even and long-term ROI.

Present the Team – Show who builds, checks, and governs.

Anchor Trust in Memory – Explain how SME knowledge is governed and reused.

Make Transparency the Differentiator – Build for audit, not mystery.

Final thoughts: AI agents are boardroom assets, not lab toys

AI agents are not experiments. They’re enterprise operating systems.

Done right, they won’t just replace tasks—they’ll change how companies think, decide, and scale.

The best product leaders will not just ship agents. They will fund, defend, and grow them like businesses.
